artificial intelligence

Need Gift-Buying Advice for That Special Someone? Our AI SantaBot is Here to Help

Published 4 months ago

By admin

If you’ve been riding the wave of Bitcoin’s latest surge or watching your crypto wallet grow, the holidays might feel like the perfect time to turn those gains into memorable gifts.

But as any seasoned gifter knows, a big price tag doesn’t always guarantee a big impression.

That’s where SantaBot—our AI agent experiment designed to help you step up your gift-giving game—can help.

The project started with a simple question: Could AI decode personal chat messages to suggest more meaningful presents for your friends and loved ones? As it turns out, it can.

Designed to take the guesswork out of giving, SantaBot digs through conversation histories to build detailed personality profiles, gathering insights about a person’s habits, preferences, and other traits.

It even maps out Myers-Briggs and Enneagram personality types based on how people talk to each other.

The tool provides creative personalization ideas to make each gift truly unique.

For instance, you could gift your son a baseball bat engraved with his uniform number and initials or surprise your wife with a smartwatch featuring straps in her favorite color. These small, thoughtful touches can make a big impact.

Hands-On

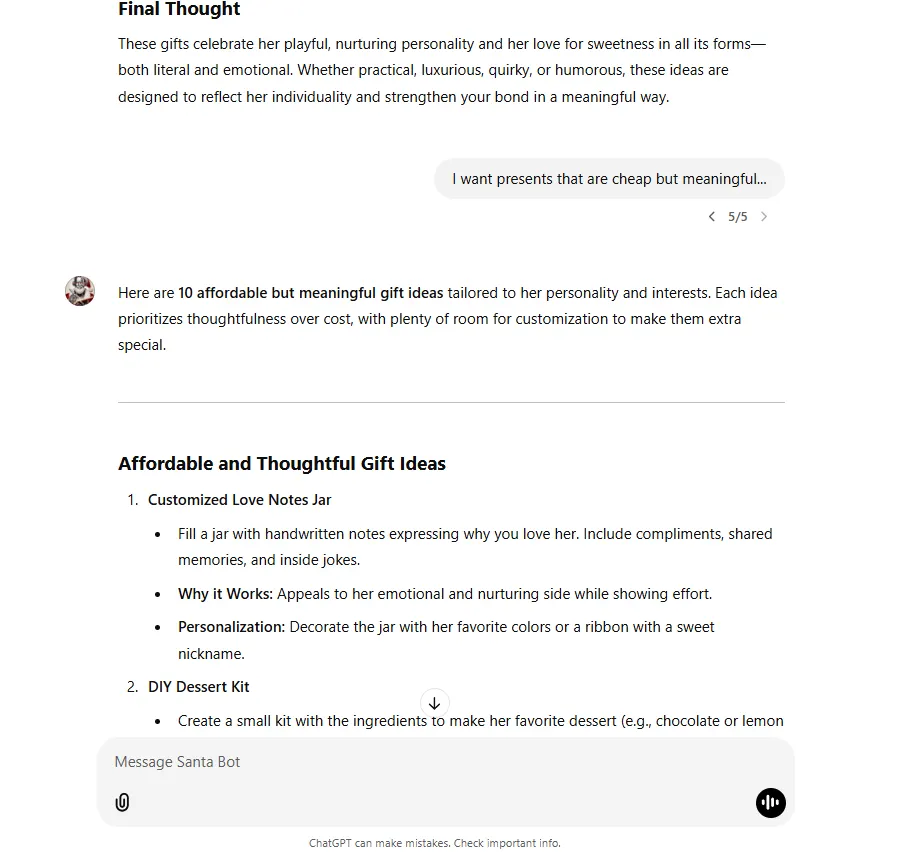

Before releasing my bot to the world, I decided to give it a try. I uploaded my WhatsApp conversation with my wife and asked the bot to tell me the best gifts I could give her.

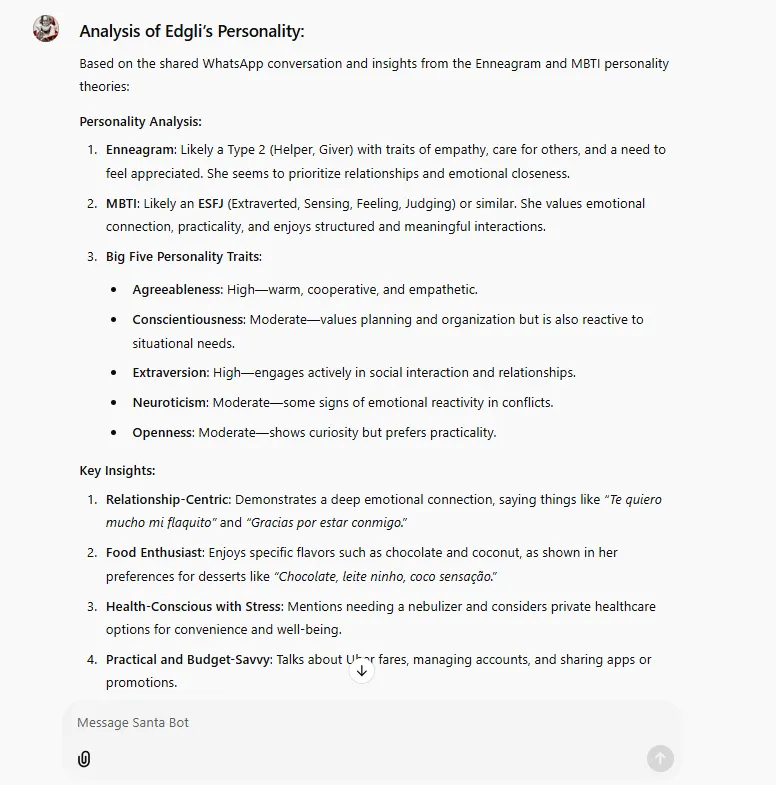

SantaBot psychoanalyzed my wife’s WhatsApp messages, and folks, we’ve got ourselves a certified Type 2 personality (Helper, Giver) with a major love for desserts and fitness.

According to the bot, she’s what personality experts call an ESFJ (Extraverted, Sensing, Feeling, Judging), basically someone who’d organize a group hug and then make sure everyone filled out a satisfaction survey afterward.

The personality analysis shows she ranks high in agreeableness and extraversion, moderate in neuroticism (their words, not mine—I value my life), and has a practical streak that somehow doesn’t apply to transportation choices.

The bot didn’t stop at basic personality traits. It went full CIA analyst on our conversations, noting everything from her use of “Te quiero mucho mi flaquito” (translation: “I love you so much, my skinny one”) to her appreciation for little details over luxurious things.

SantaBot even picked up on her Uber addiction faster than our credit card company.

It painted a picture of someone who’s health-conscious but won’t walk two blocks if there’s a car service available, which is not 100% accurate but is easy to infer if the only thing you know about her is our conversation history.

Now, for the gift suggestions. These were some of the most exciting picks:

For the practical side:

- A Miniature Chocolate Fountain with a customized base that says “Edgli’s [her nickname] Sweet Spot” (she had shown interest in buying one for future events).

- An “Uber Survival Kit” with a prepaid card (cheaper than buying her an actual car) or a mug with “Boss of Uber Requests” printed on it.

- A literal vault for her chocolate stash with “Keep Out, Unless You’re Amorsito” engraved on it—so I stay away from it.

For the fancy pants moments:

- A custom box with desserts from Venezuela and Brazil.

- A spa kit named “Aromas de Edgli” (much fancier than “Smell Like My Wife”).

- A leather planner embossed with “Amorsito’s Plans.”

- A Star Map Print showcasing the constellations of a meaningful date, like the day we met or the day our daughter was born.

And for when money is no object:

- A smartwatch to help her keep track of her fitness activity and the calories she burns.

- A designer handbag with her initials embossed.

- A weekend getaway featuring a chocolate-tasting experience in Gramado (basically a dessert safari in one of Brazil’s best tourist places).

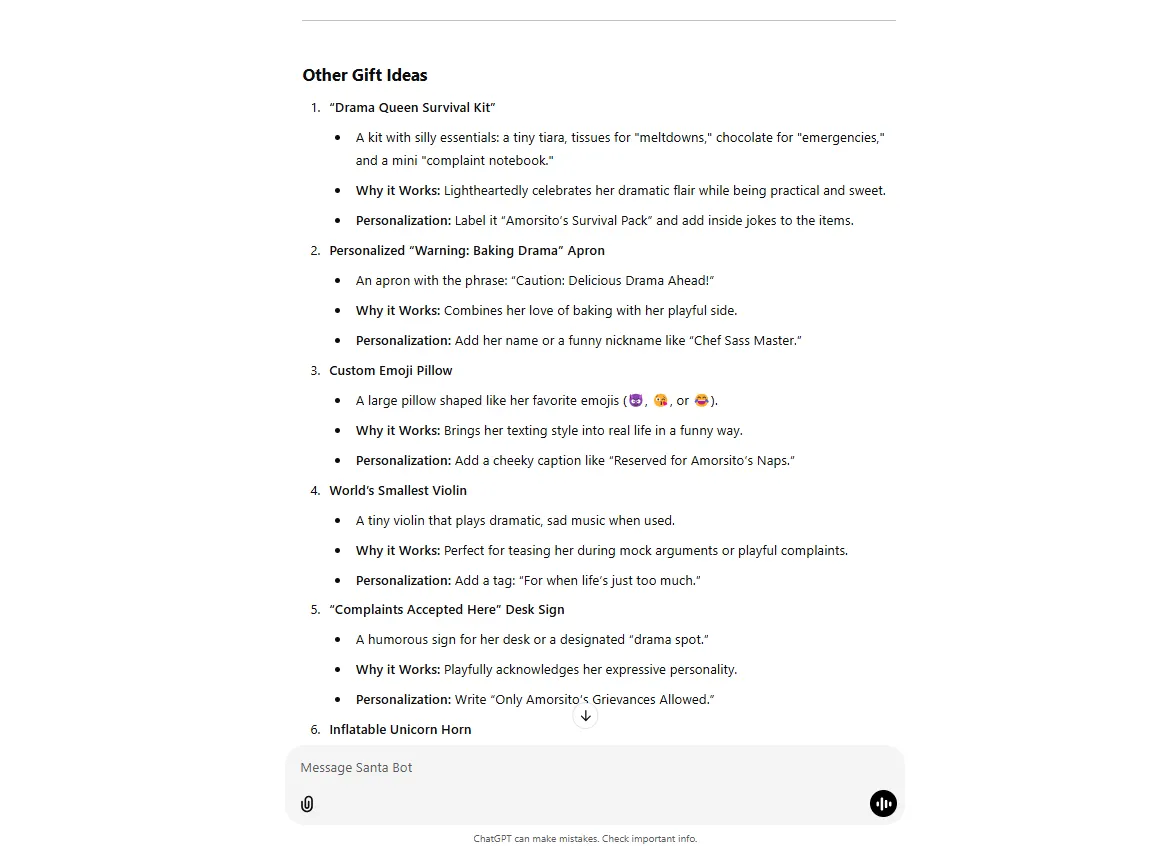

It also recommended some funny gift ideas, including a “Drama Queen Survival Kit” (which she would hate), a “Custom Emoji Pillow” (which she would love), and a personalized apron with a nickname like “Chef Sass Master.”

I compared SantaBot head-to-head against regular ChatGPT to see how it stacked up.

The difference was clear—while standard ChatGPT played it safe with generic suggestions, our specialized version picked up on subtle hints.

It’s not that ChatGPT’s suggestions were useless; they were just less personal.

How to Get SantaBot’s Help

To use our tool, upload your conversation history and ask the model for recommendations.

You can then follow up by asking for more suggestions or personalization ideas, or by providing more context about the person. The more information the AI has, the better the results should be.

Good starting prompts range from the simple, such as “Please carefully analyze this conversation and tell me what presents she/he would like,” to the more specific, such as “What are the best presents I could give to a person with an ENFP type of personality?”

You can also play with the tool and iterate with it. Once it provides a reply, you can ask for more suggestions, ask for funnier recommendations, ask for more romantic gift recommendations, etc. It all depends on your intentions and expectations.

Exporting chats is pretty straightforward, though the steps depend on which messaging app you use.

WhatsApp users can export chats from the app, though iMessage folks need to use tools like iMazing to get their conversation data. Similar options exist for Telegram, Facebook, Instagram, and TikTok users. Just google them.

Also, ensure you only upload text conversations, so export your data without photos, voice notes, or documents.

This, of course, means there are privacy concerns that you should address. SantaBot requires access to those conversations to create its detailed profiles.

Sharing such personal data without permission could be unethical. The fix isn’t perfect, but it works: Ask the other person for permission to use the conversation for an AI experiment. If they agree, you’re good to go.

If you don’t want to go that route, you can take other steps.

First, anonymize the names in your exported chats by replacing them with placeholders. Open your TXT file, use the find-and-replace function (its location varies by text editor), and replace every instance of each name with a placeholder. Save that file and upload it to ChatGPT.

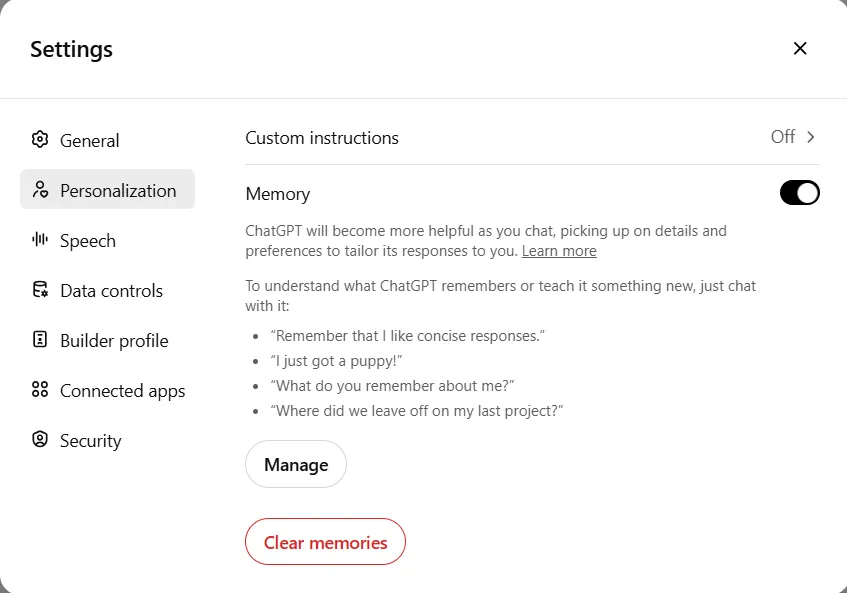
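The find-and-replace step can also be scripted. Here is a minimal Python sketch, assuming the export is a UTF-8 TXT file; the function names, the sample chat line, and the placeholder labels (`PERSON_A`, `PERSON_B`) are hypothetical and only for illustration.

```python
import re
from pathlib import Path


def anonymize_chat(text: str, replacements: dict[str, str]) -> str:
    """Replace every whole-word occurrence of each name with its placeholder."""
    # Handle longer names first so "Ana Maria" is replaced before "Ana".
    for name in sorted(replacements, key=len, reverse=True):
        placeholder = replacements[name]
        pattern = re.compile(rf"\b{re.escape(name)}\b", flags=re.IGNORECASE)
        # A function replacement keeps the placeholder literal (no escape handling).
        text = pattern.sub(lambda _match: placeholder, text)
    return text


def anonymize_file(src: str, dst: str, replacements: dict[str, str]) -> None:
    """Read an exported TXT chat, anonymize it, and write the result to a new file."""
    original = Path(src).read_text(encoding="utf-8")
    Path(dst).write_text(anonymize_chat(original, replacements), encoding="utf-8")


if __name__ == "__main__":
    # Hypothetical names and chat line; real exports vary by app and locale.
    sample = "12/24/24, 9:15 PM - Maria: Jose, did you order the Uber?"
    print(anonymize_chat(sample, {"Maria": "PERSON_A", "Jose": "PERSON_B"}))
```

Matching is case-insensitive and limited to whole words, so a name like “Maria” would not be replaced inside “Mariana.” Nicknames and misspellings still need their own entries in the mapping.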

Second, ensure OpenAI cannot use that chat to train its models. Start by disabling memories in your ChatGPT settings: click on your profile picture in the top right corner of ChatGPT, go to Settings, open Personalization, and turn off “Memory.”

Alternatively, you can click on “Manage” after your conversation is done and delete any memory created from your latest chat.

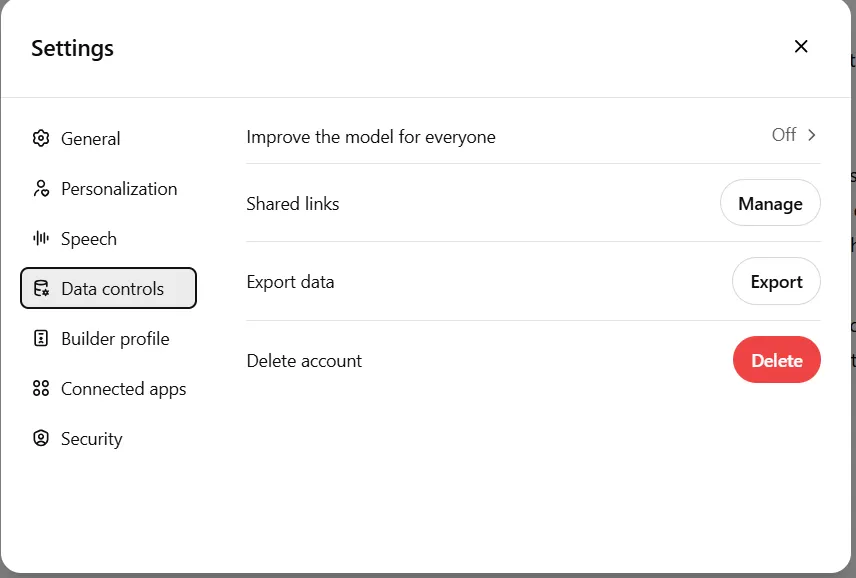

Additionally, you can prevent OpenAI from training its models on your conversation by turning off its permission to use your data, which is granted by default.

To change that, go to Settings, click on Data controls, and turn off the option “Improve the model for everyone.” This sounds pretty, but in non-corpo language, it can be translated as “Let OpenAI use your conversations to train its models for free and probably charge you more once they get more powerful.”

Overall, building GPTs and specialized agents can bring practical solutions to everyday challenges, like the art of gifting.

Our AI may surprise you with clever ideas that turn ordinary presents into unforgettable gestures so you can be as successful in your family reunions as you think you are trading crypto.

At the very least, when the presents miss the mark, you’ll have something better to blame than your lack of creativity.

Edited by Sebastian Sinclair

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.


artificial intelligence

Elon Musk Folds X Into xAI, Creating a $113 Billion Juggernaut

Published 1 week ago on March 29, 2025

By admin

In a consolidation of his tech businesses, X owner Elon Musk said in a post on Friday that xAI, the developer of Grok, had acquired X (formerly Twitter) in an all-stock transaction. According to Musk, the merger values xAI at $80 billion and X at $33 billion.

Musk agreed to buy Twitter for $44 billion in April 2022 and closed the deal that October. By October 2024, Fidelity Investments had adjusted the valuation of its stake in X, estimating the company’s overall value to be approximately $9.4 billion.

Though its valuation had recovered somewhat by December 2024, it was still down a whopping 77% from Musk’s purchase price. How Musk arrived at a $33 billion valuation, in other words, would make for interesting reading.

@xAI has acquired @X in an all-stock transaction. The combination values xAI at $80 billion and X at $33 billion ($45B less $12B debt).

Since its founding two years ago, xAI has rapidly become one of the leading AI labs in the world, building models and data centers at…

— Elon Musk (@elonmusk) March 28, 2025

Already the head of several companies, including SpaceX and Tesla, Musk launched xAI in July 2023, a year after purchasing Twitter. While he said the goal of xAI was to “understand reality,” an ongoing feud with his former business partner, OpenAI CEO Sam Altman, was also a driving factor.

“Today, we officially take the step to combine the data, models, compute, distribution, and talent. This combination will unlock immense potential by blending xAI’s advanced AI capability and expertise with X’s massive reach,” Musk wrote on X Friday. “The combined company will deliver smarter, more meaningful experiences to billions of people while staying true to our core mission of seeking truth and advancing knowledge.”

Calling X “the digital town square,” Musk said the platform boasted over 600 million active users. However, Meta, which launched a rival social media platform, Threads, in July 2023, and Bluesky, which launched in February of that year, have been trying to woo away users.

Bluesky’s user count surpassed 27.44 million in January 2025, and many of those users had left Twitter after Musk’s takeover. At the same time, bolstered by its connections to Facebook and Instagram, Threads had over 320 million monthly active users as of February 2025.

Musk made headlines in July of last year when it was revealed that Grok would be trained on X user data. The setting can be disabled, but is on by default for user accounts.

Some social media users jeered today’s announcement, mocking the apparently sizable valuation boost in a deal between two of Musk’s own companies.

“I also sold my 2008 Honda Accord to myself for $1 million,” New York Times tech reporter Ryan Mac said on Bluesky.

“Wait a min…what’s different though… other than on paper?” an X user asked.

The merger, however, could serve a more pragmatic purpose. With X still carrying significant debt—$12 billion, according to Musk’s tweet—folding it into the xAI umbrella could give the company access to new investors, improved valuation, and a narrative pivot away from Twitter’s chaotic takeover.

“This will allow us to build a platform that doesn’t just reflect the world but actively accelerates human progress,” Musk said.


artificial intelligence

Ancient Mystery or Modern Hoax? Experts Debunk Giza Pyramid Claims

Published 2 weeks ago on March 25, 2025

By admin

Ever since the pyramids of Egypt rose from the desert over 4,000 years ago, people have wondered how they were built—sparking centuries of speculation, fringe theories, and wild claims involving lost technologies and extraterrestrials.

That speculation got a modern boost last fall when a Chinese research team claimed to have used radar to detect plasma bubbles above the Great Pyramid of Giza. These reports reignited online theories and alternative histories.

Building on that momentum, a group known as the Khafre Project attracted attention last week with its own dramatic claims of a vast network of underground structures beneath the Pyramid of Khafre, reaching depths of up to 2,000 feet. The group is led by Professor Corrado Malanga of Italy’s University of Pisa and researcher Filippo Biondi of the University of Strathclyde in Scotland.

Accompanied by detailed graphics and viral videos, the group’s assertions quickly spread across social media, breathing new life into old mysteries.

X lit up with speculation, including theories that the chambers amplified Earth’s low-frequency electromagnetic waves—possibly functioning as an ancient power plant. Some even suggested the find could rewrite our understanding of the pyramids.

“The images suggest a hidden world under the feet of the Great Pyramids: halls and shafts that have waited millennia to be found,” technologist Brian Roemmele wrote in a blog post. “Such a scenario has an almost storybook allure as if turning the page on a chapter that historians didn’t know existed.”

Debunking the myth

However, Egyptologist and historian Flora Anthony wasn’t buying into the hype.

“Something seemed off, so I looked up the original source, read through it, and realized the paper had nothing to do with the images or claims being shared in the media,” she told Decrypt. “Turns out, the article isn’t peer-reviewed. Someone familiar with the journal where the report was published said they publish quickly and aren’t established in the field—which matters since peer review is important.”

The pyramids on the Giza Plateau—built during Egypt’s Fourth Dynasty between 2600 B.C. and 2500 B.C.—were royal tombs for the pharaohs Khufu, Khafre, and Menkaure.

The idea that extraterrestrials may have played a role in constructing the pyramids has long been a staple of fringe science and pop culture.

Proponents of this “Ancient Alien” theory point to the monuments’ precise alignment, massive scale, and engineering complexity as evidence that ancient civilizations couldn’t have built them alone.

“The people behind this aren’t scientists. One is a UFO researcher who believes aliens are interdimensional parasites that hijack human souls,” Anthony said. “The other writes conspiracy books about a lost, pre-dynastic Egyptian civilization and recently promoted a so-called ‘harmonic investigation’ of the Great Pyramid using a technology he claims to have patented.”

While their claims might sound impressive at first glance, there’s nothing solid underneath, Anthony added.

“None of it is peer-reviewed, credible, or based in real science,” she said. “It’s not science. It’s not history.”

Pseudoscience

Ancient Aliens theory, Anthony said, is rooted in pseudo-archaeology, eugenics, and historical racism, promoting the idea that African and Mesoamerican civilizations couldn’t have built monumental structures like the pyramids without help from extraterrestrials.

“These theories uphold white supremacy by pushing a false narrative of white superiority,” Anthony said. “No one questions how medieval European peasants—living in filth without basic sanitation—built intricate cathedrals. But when Africans or Mesoamericans build pyramids, suddenly it must be aliens.”

On March 16, the Khafre Project presented apparent evidence of five chambers and eight shafts, using annotated tomographic images and artist renderings to illustrate its findings.

Yet while social media continues to buzz with wild theories, Egyptologists, including Salima Ikram, a professor of Egyptology at the American University in Cairo, are unconvinced.

“It all sounds very improbable to me as most machinery cannot penetrate that deeply, and there is no data to evaluate this claim,” Ikram told Decrypt. “So far, it seems it is in the news, with no peer-reviewed paper or raw data to back this up. And the technology does not seem capable of what they claim.”

Ikram added that Egyptian authorities confirmed they had not granted the Khafre Project permission to conduct any work at the site.

Likewise, the fact-checking website Snopes investigated the Khafre Project’s claims and declared them false in a recent report.

“Despite the popularity of the claim, there is no evidence to support it,” the report said. “In addition, no credible news outlets or scientific publications have reported on this rumor.”

Digging to the truth

The idea of using radar technology to scan the pyramids is not new.

Radar technology has been used multiple times to scan the pyramids of Giza, most notably in 2016 as part of the ScanPyramids project, revealing hidden voids and structural anomalies within the ancient monuments.

In 2022, researchers Corrado Malanga and Filippo Biondi conducted a synthetic aperture radar scan on the Khufu Pyramid, which many suspect is the basis for the Khafre Project’s images.

According to Snopes, the Khafre Project’s research has not been peer-reviewed or corroborated by credible archaeologists. The fact-checking site also pointed to what it called “Malanga’s well-documented interest in UFO and alien abduction research as well as Dunn’s ‘power plant’ theory.”

“Additionally, one of the most popular images being shared in support of the claim, depicting a cross-section of the pyramid and the alleged structures, was generated using artificial intelligence,” Snopes said. “Uploading the image to the AI-detection platform Hive Moderation resulted in a 99.9% chance the image was generated using AI.”

Ultimately, the story says more about our appetite for mystery than it does about any discovery beneath the pyramids.

Until actual evidence surfaces, the only thing buried beneath the Giza Plateau is the truth—and for now, it’s staying that way.

Edited by Sebastian Sinclair


artificial intelligence

Swedish Film ‘Watch the Skies’ Set for US Release With AI ‘Visual Dubbing’

Published 2 weeks ago on March 23, 2025

By admin

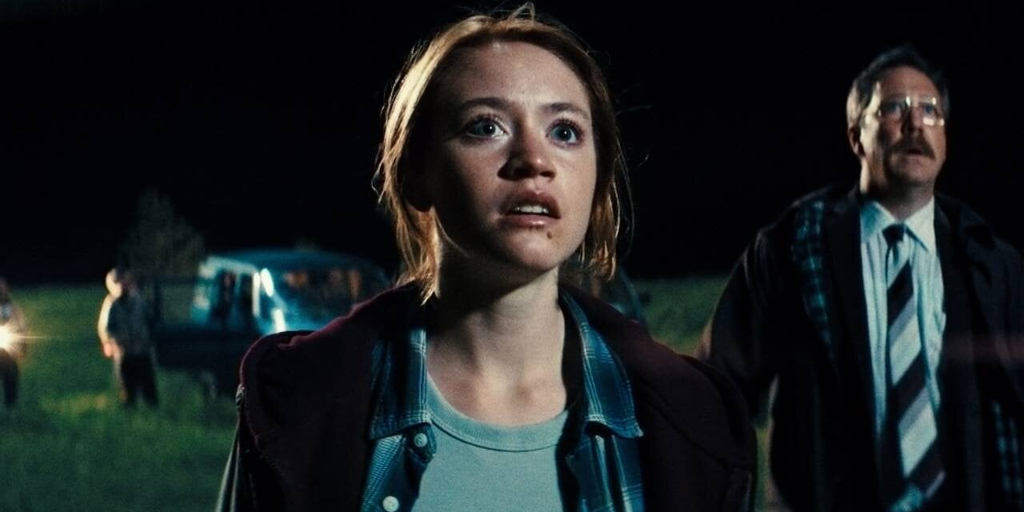

When Swedish UFO film “Watch the Skies” hits U.S. cinemas this May, audiences won’t be able to tell that it wasn’t made in English.

The film is the first full theatrical release to showcase “visual dubbing” technology from AI firm Flawless, which enables actors’ performances to be digitally lip-synced with foreign-language dubs.

“Watch the Skies” follows rebellious teenager Denise, who teams up with a club of UFO watchers to solve the mystery of her missing father. The film was shot in Swedish, and released there under the title “UFO Sweden.” However, for its U.S. release, the original cast have re-recorded their performances in English, with Flawless using its TrueSync machine learning technology to digitally alter their lip movements so that they sync up with the English dialogue.

“Flawless and their technology gives us the opportunity to release the film for a much larger audience,” said writer-director Victor Danell in a making-of featurette.

The filmmakers stress that the use of the technique has “full endorsement from SAG,” the actors’ union, which went on strike in 2023 amid concerns over the “threat” posed by AI to the profession.

“A lot of filmmakers and a lot of actors will be afraid of this technology at first, but we have the creative control, and to act out the film in English was a real exciting experience,” said Danell, adding that, “It’s still our movie, it’s still the actors’ performance, and that’s the key part.”

What is TrueSync?

In a 2023 presentation, Flawless co-founder Scott Mann explained that the company’s TrueSync technology uses deep learning to create a volumetric 3D representation of the actors’ faces throughout a film, which can then be altered to match the dubbed dialogue.

TrueSync was previously used on the 2022 film “Fall” to remove swearing for a PG-13 edit, while another of the company’s products, DeepEditor, enables an actor’s performance to be extracted from one scene and applied to another scene, without the need for reshoots.

The company has partnered with distributor XYZ Films to release a slate of features localized using TrueSync, including “Run Lola Run” director Tom Tykwer’s upcoming “The Light,” horror feature “Vincent Must Die,” and South Korean film “Smugglers.”

Edited by Andrew Hayward

