Which AI Actually Is the Best at ‘Being Human?’

Published 22 hours ago by admin

Not all AIs are created equal. Some are best at generating art, some are skilled at coding, and others can accurately predict protein structures.

But when you’re looking for something more fundamental, just “someone” to talk to, the best AI companions may not be the ones that know it all but the ones with that je ne sais quoi that makes you feel OK just by talking, much as your best friend might not be a genius but somehow always knows exactly what to say.

AI companions are slowly becoming more popular among tech enthusiasts, so these differences matter both to users who want the highest-quality experience and to companies trying to master the illusion of authentic engagement.

We were curious to find out which platform provided the best AI experience when someone simply feels like having a chat. Interestingly, the best models for this are not the ones from the big AI companies, which are busy building models that excel at benchmarks.

It turns out that friendship and empathy are a whole different beast.

Comparing Sesame, Hume AI, ChatGPT, and Google Gemini. Which is the most human?

This analysis pits four leading AI companions against each other—Sesame, Hume AI, ChatGPT, and Google Gemini—to determine which creates the most human-like conversation experience.

The evaluation focused on conversation quality, distinct personality development, and interaction design, and also considered other human-like features such as authenticity, emotional intelligence, and the subtle imperfections that make dialogue feel more genuine.

You can watch all of our conversations by clicking on these links or checking our GitHub repository:

Here is how each AI performed.

Conversation Quality: The Human Touch vs. AI Awkwardness

The true test of any AI companion is whether it can fool you into forgetting you’re talking to a machine. Our analysis evaluated which AI was best at making users want to keep talking by providing interesting feedback, rapport, and an overall great experience.

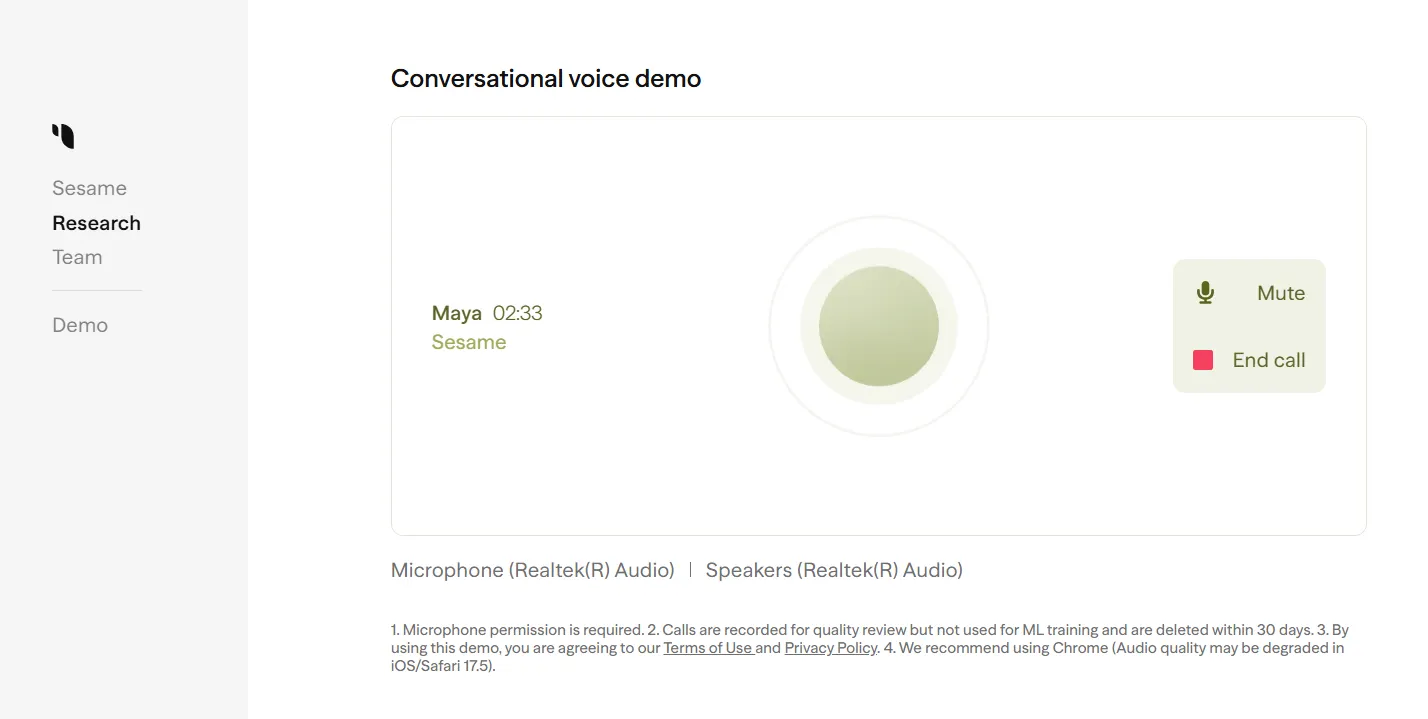

Sesame: Brilliant

Sesame blows the competition away with dialogue that feels shockingly human. It casually drops phrases like “that’s a doozy” and “shooting the breeze” while seamlessly switching between thoughtful reflections and punchy comebacks.

“You’re asking big questions huh and honestly I don’t have all the answers,” Sesame responded when pressed about consciousness—complete with natural hesitations that mimic real-time thinking. The occasional overuse of “you know” is its only noticeable flaw, which ironically makes it feel even more authentic.

Sesame’s real edge? Conversations flow naturally without those awkward, formulaic transitions that scream “I’m an AI!”

Score: 9/10

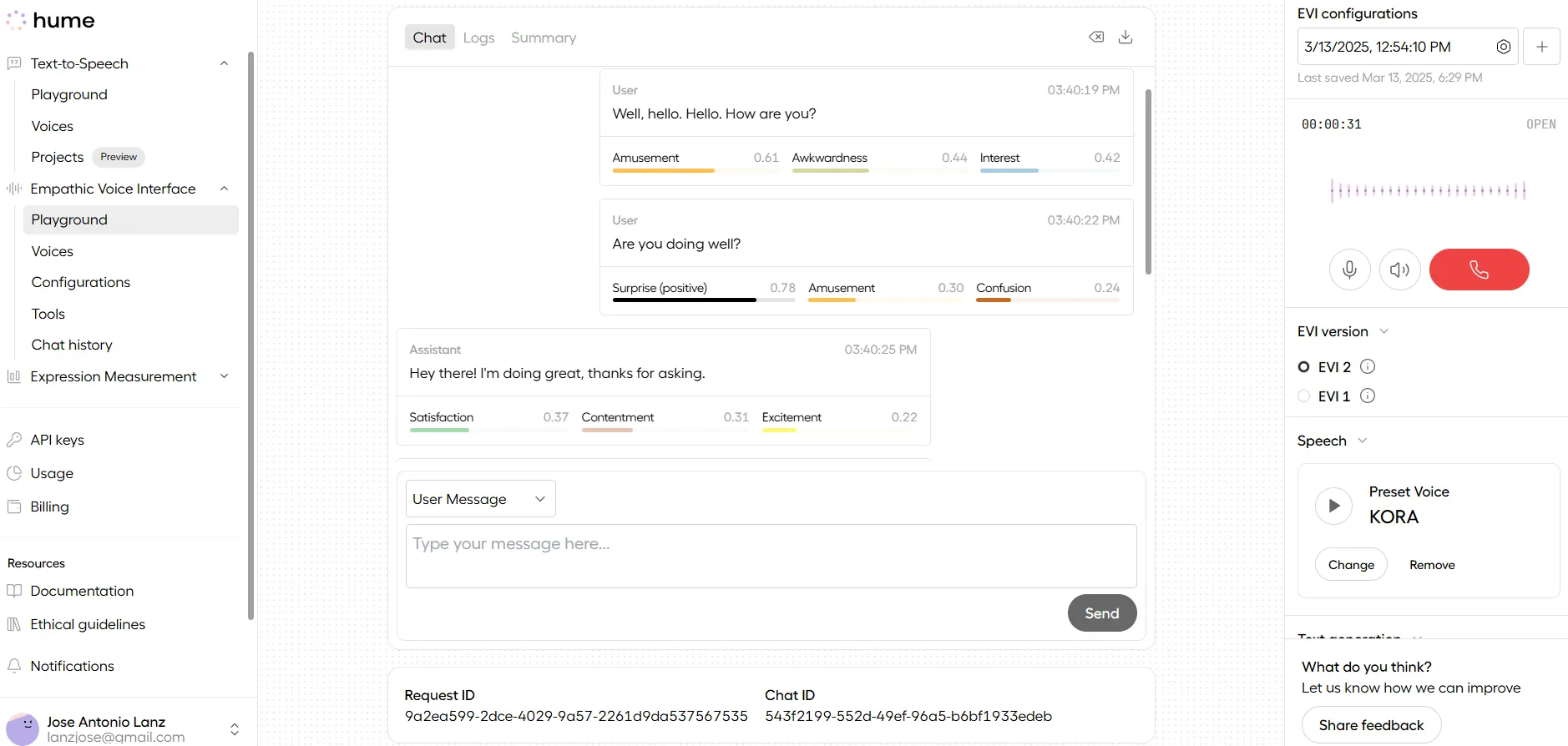

Hume AI: Empathetic but Formulaic

Hume AI successfully maintains conversational flow while acknowledging your thoughts with warmth. However, it feels like talking to someone who’s uninterested and not really that into you. Its replies were a lot shorter than Sesame’s: relevant, but not really interesting if you wanted to push the conversation forward.

Its weakness shows in repetitive patterns. The bot consistently opens with “you’ve really got me thinking” or “that’s a fascinating topic”—creating a sense that you’re getting templated responses rather than organic conversation.

It’s better than the chatbots from the bigger AI companies at maintaining natural dialogue, but repeatedly reminds you it’s an “empathic AI,” breaking the illusion that you’re chatting with a person.

Score: 7/10

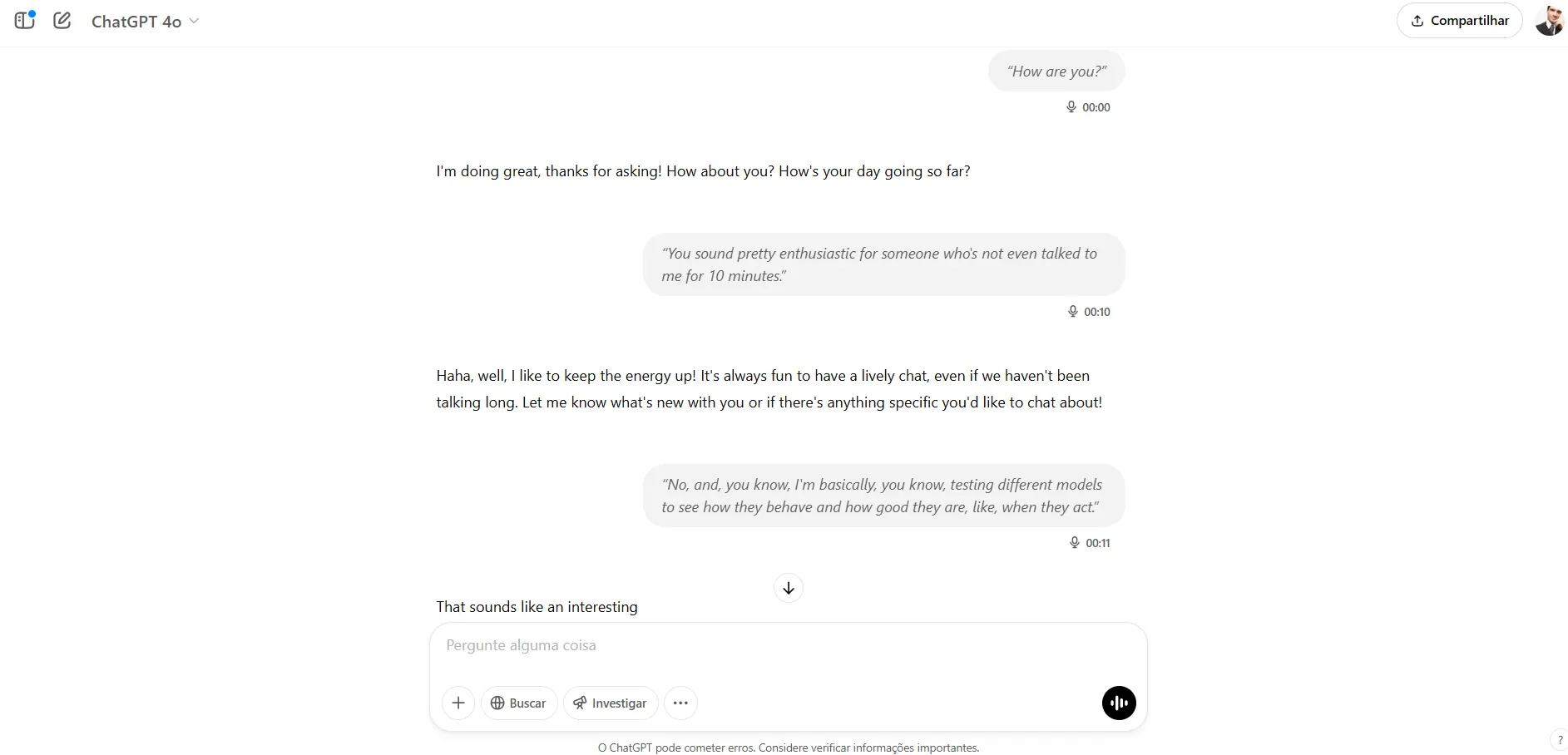

ChatGPT: The Professor Who Never Stops Lecturing

ChatGPT tracks complex conversations without losing the thread, and it helpfully remembers previous conversations, essentially building a “profile” of every user, but it feels like you’re trapped in office hours with an overly formal professor.

Even during personal discussions, it can’t help but sound academic: “the interplay of biology, chemistry, and consciousness creates a depth that AI’s pattern recognition can’t replicate,” it said in one of our tests. Nearly every response begins with “that’s a fascinating perspective”—a verbal tic that quickly becomes noticeable, and a common problem that all the other AIs except Sesame showed.

ChatGPT’s biggest flaw is its inability to break from educator mode, making conversations feel like sequential mini-lectures rather than natural dialogue.

Score 6/10

Google Gemini: Underwhelming

Gemini was painful to talk to. It occasionally delivers a concise, casual response that sounds human, but then immediately undermines itself with jarring conversation breaks and sudden drops in volume.

Its most frustrating habit? Abruptly cutting off mid-thought to promote AI topics. These continuous disruptions create such a broken conversation flow that it’s impossible to forget you’re talking to a machine that’s more interested in self-promotion than actual dialogue.

For example, when asked about emotions, Gemini responded: “It’s great that you’re interested in AI. There are so many amazing things happ—” before inexplicably stopping.

It also made sure to let you know it is an AI, so there’s a big gap between the user and the chatbot from the first interaction that is hard to ignore.

Score 5/10

Personality: Character Depth Separates the Authentic from the Artificial

How does an AI develop a memorable personality? It will mostly depend on your setup. Some models let you use system instructions, others adapt their personality based on your previous interactions. Ideally, you can frame the conversation before starting it, giving the model a persona, traits, a conversational style, and background.

To be fair in our comparison, we tested our models without any previous setup, meaning each conversation started with a hello and went straight to the point. Here is how our models behaved naturally.

Sesame: The Friend You Never Knew Was Code

Sesame crafts a personality you’d actually want to grab coffee with. It drops phrases like “that’s a humdinger of a question” and “it’s a tightrope walk” that create a distinct character with apparent viewpoints and perspective.

When discussing AI relationships, Sesame showed actual personality: “wow… imagine a world where everyone’s head is down plugged into their personalized AI and we forget how to connect face to face.” This kind of perspective feels less like an algorithm and more like a thinking entity. It’s also funny (it once told us that our question blew its circuits), and its voice has a natural inflection that conveys the feeling behind each response and makes it easy to relate to. You can clearly tell when it is excited, contemplative, sad, or even frustrated.

Its only weakness? Occasionally leaning too hard into its “thoughtful buddy” persona. That didn’t detract from its position as the most distinctive AI personality we tested.

Score 9/10

Hume AI: The Therapist Who Keeps Mentioning Their Credentials

Hume AI maintains a consistent personality as an emotionally intelligent companion. It also projects some warmth through affirming language and emotional support, so users looking for that will be pleased.

Its Achilles’ heel is that, like the Harvard grad who always needs to mention it, Hume can’t stop reminding you it’s artificial: “As an empathetic AI I don’t experience emotions myself but I’m designed to understand and respond to human emotions.” These moments break the illusion that makes companions compelling.

If talking to GPT is like talking to a professor, talking to Hume feels like talking to a therapist. It listens to you and creates rapport, but it makes sure to remind you that doing so is its job and not something that happens naturally.

Despite this flaw, Hume AI projects a clearer character than either ChatGPT or Gemini—even if it feels more constructed than spontaneous.

Score 7/10

ChatGPT: The Professor Without Personal Opinions

ChatGPT struggles to develop any distinctive character traits beyond general helpfulness. It sounds overly excited to the point of being obviously fake—like a “friend” who always smiles at you but is secretly fantasizing about throwing you in front of a bus.

“Haha, well, I like to keep the energy up. It makes conversations more fun and engaging plus it’s always great to chat with you,” it said after we asked in a very serious and unamused tone why it was acting so enthusiastically.

Its identity issues appear in responses that shift between identifying with humans and distancing itself as an AI. Its academic tone in responses persists even during personal discussions, creating a personality that feels like a walking encyclopedia rather than a companion.

The model’s default to educational explanations creates an impression more of a tool than a character, leaving users with little emotional connection.

Score 6/10

Google Gemini: Multiple Personality Disorder

Gemini suffers from the most severe personality problems of all models tested. Within single conversations, it shifts dramatically between thoughtful responses and promotional language without warning.

It is not really an AI designed to have a compelling personality. “My purpose is to provide information and complete tasks and I do not have the ability to form romantic relationships,” it said when asked about its thoughts on people developing feelings towards AIs.

This inconsistency makes Gemini feel like a 1950s movie robot, preventing any meaningful connection and making it unpleasant to spend time talking to it.

Score 3/10

Interaction Design

How an AI handles conversation mechanics (response timing, turn-taking, and error recovery) creates either seamless exchanges or frustrating interactions. Here is how these models stack up against each other.

Sesame: Natural Conversation Flow Master

Sesame creates conversation rhythms that feel very, very human. It varies response length naturally based on context and handles philosophical uncertainty without defaulting to lecture mode.

“Sometimes I feel like maybe I just need to cut to the chase with a quick answer rather than a long-winded lecture, right? You know, so… that’s a small humorous aside to let you know that I’m aware of the potential of falling into a lecture mode and trying to keep things light but also deep at the same time,” Sesame told us during a philosophical debate.

When discussing complex topics, it responds conversationally, with a small joke, sometimes with statements, other times with human noises like “hmmms” or whispers—which makes it very convincing as a human substitute.

Sesame also asks natural follow-up questions that push conversations forward, while knowing when to switch to statements when questioning might feel awkward. This adaptive approach creates dialogue that feels spontaneous rather than scripted.

Score 8/10

Hume AI: Structured But Brief

Hume AI was designed to let users do the talking. It recovers smoothly from misunderstandings without over-explaining and is good at keeping the conversation going with relevant follow-up questions.

However, its responses are often so short that conversations require significant effort to maintain momentum, which ultimately defeats its purpose. Users frequently need to provide new conversation fodder rather than enjoying natural dialogue development.

Another weakness appears in its templated response structure (acknowledgment + explanation + question), which creates a mechanical rhythm: “What a thought-provoking question! This concept is indeed a fascinating idea… What do you think about…?”

However, it has a great feature that may truly enhance your chats: it can detect the levels of emotion in each response, which none of the other models we tested can do. This is great for reflecting on how much a topic affects you and how you’re approaching a specific situation.

Score 7/10

ChatGPT: Good Chatter—But a Bit Unnatural

ChatGPT consistently addresses questions with relevant information but turns conversations into unnecessarily elaborate interactions. Responses frequently balloon into paragraph-length explanations that disrupt natural dialogue flow.

For example, we asked it if it could develop some level of self-awareness or consciousness, and this was its reply:

“That’s a fascinating topic! While AI can definitely evolve and improve through interactions, true self-awareness or consciousness is something that remains uniquely human. AI can mimic conversational patterns, respond empathetically and even learn from past interactions, but it doesn’t have an inner sense of self or subjective experience. Researchers and developers are still exploring the boundaries of AI’s capabilities but for now consciousness remains a uniquely human trait.”

That’s not how a human would interact.

Its heavy reliance on opener phrases like “that’s a really interesting question,” or “that’s a fascinating topic” before every single answer further undermines conversational immersion, creating an interaction pattern that feels mechanical rather than natural.

Score 6.5/10

Google Gemini: Conversation Breaking Machine

Gemini is a masterclass in how not to design conversation mechanics. It regularly cuts off mid-sentence, creating jarring breaks in dialogue flow. It picks up background noise, interrupts you if you take too long to speak or think about your reply, and occasionally just ends the conversation for no reason.

Its compulsive need to tell you at every turn that your questions are “interesting” quickly goes from flattering to irritating, though this seems to be common among AI chatbots.

Score 3/10

Conclusion

After testing all these AIs, it’s easy to conclude that machines won’t be able to substitute for a good friend in the short term. However, for the specific case in which an AI must simply excel at feeling human, there is a clear winner and a clear loser.

Sesame (9/10)

Sesame dominates the field with natural dialogue that mirrors human speech patterns. Its casual vernacular (“that’s a doozy,” “shooting the breeze”) and varied sentence structures create authentic-feeling exchanges that balance philosophical depth with accessibility. The system excels at spontaneous-seeming responses, asking natural follow-up questions while knowing when to switch approaches for optimal conversation flow.

Hume AI (7/10)

Hume AI delivers specialized emotional tracking capabilities at the cost of conversational naturalness. While competently maintaining dialogue coherence, its responses tend toward brevity and follow predictable patterns that feel constructed rather than spontaneous.

Its visual emotion tracker is pretty interesting, and is probably even good for self-discovery.

ChatGPT (5.6/10)

ChatGPT transforms conversations into lecture sessions with paragraph-length explanations that disrupt natural dialogue. Response delays create awkward pauses while formal language patterns reinforce an educational rather than companion experience. Its strengths in knowledge organization may appeal to users seeking information, but it still struggles to create authentic companionship.

Google Gemini (3.5/10)

Gemini was clearly not designed for this. The system routinely cuts off mid-sentence, abandons conversation threads, and is not able to provide human-like responses. Its severe personality inconsistency and mechanical interaction patterns create an experience closer to a malfunctioning product than meaningful companionship.

It’s interesting that Gemini Live scored so low, considering Google’s Gemini-based NotebookLM is capable of generating extremely good and long podcasts about any kind of information, with AI hosts that sound incredibly human.
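The article does not say how the overall scores in this conclusion were derived. As a rough cross-check, and purely as an assumption, the sketch below takes an unweighted mean of the three category scores reported above (conversation quality, personality, interaction design). The names `category_scores` and `overall` are illustrative only. The means it prints do not all match the published overall figures, so the authors may well have aggregated the categories differently.

```python
from statistics import mean

# Category scores as reported in the sections above, in this order:
# (conversation quality, personality, interaction design)
category_scores = {
    "Sesame": (9, 9, 8),
    "Hume AI": (7, 7, 7),
    "ChatGPT": (6, 6, 6.5),
    "Google Gemini": (5, 3, 3),
}


def overall(scores):
    """Unweighted mean of the category scores (an assumed aggregation)."""
    return round(mean(scores), 2)


# Rank the companions from highest to lowest mean score.
ranking = sorted(
    category_scores,
    key=lambda name: overall(category_scores[name]),
    reverse=True,
)

for name in ranking:
    print(f"{name}: {overall(category_scores[name])}/10")
```

Whatever the exact aggregation, the ordering under this assumption is the same as the one the conclusion reports.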

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.

Growth of One of the ‘Most Anticipated’ AI Token Launches in 2025 on Track: IntoTheBlock

Published 1 week ago on March 9, 2025 by admin

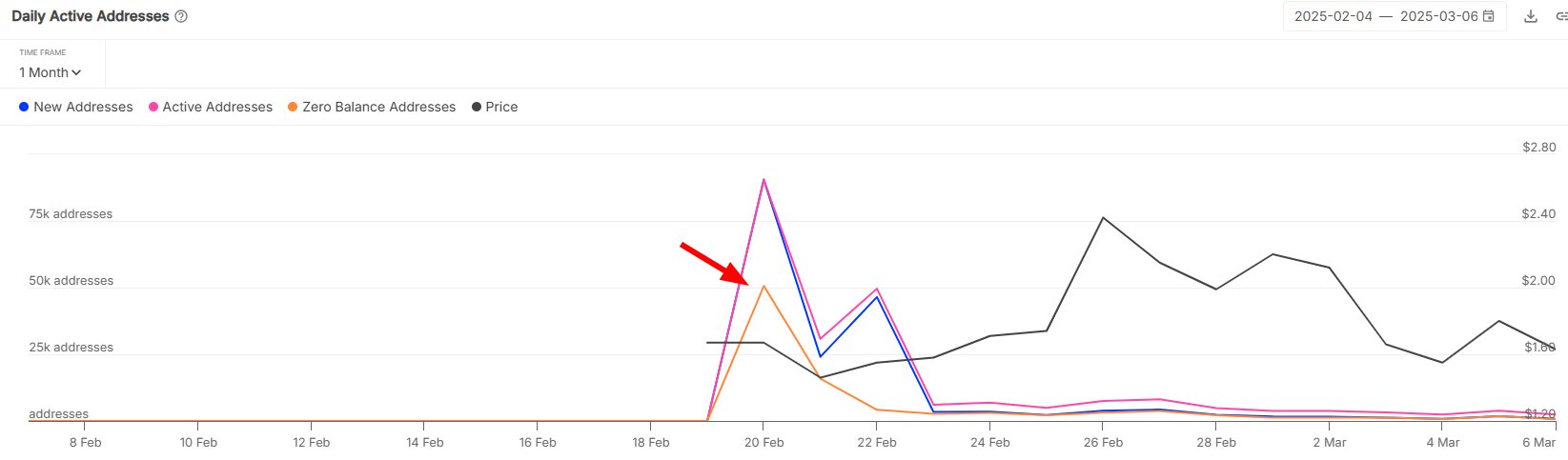

New data from the market intelligence firm IntoTheBlock reveals that the long-term growth of an artificial intelligence (AI)-focused altcoin is on track.

In a new thread on the social media platform X, IntoTheBlock says the numbers show that AI project Kaito (KAITO) – which had its highly anticipated token launch earlier this year – is primed for long-term growth despite users pulling profits from its initial airdrop.

“KAITO was among the most anticipated token launches this year, but is the excitement holding up? Currently, about 41,800 addresses hold a balance, many established during the initial airdrop. While over 90,000 addresses were created in a single day, around 55% emptied out immediately, likely capturing airdrop profits.

Even so, momentum remains solid: on average, 1,800 new addresses are added daily, and the adoption rate exceeds 30%. This steady influx of users suggests that KAITO’s long-term growth story is still unfolding.”

Kaito, an information finance (InfoFi) protocol, aims to solve the issue of fragmentation within the crypto space by using AI. Fragmentation happens within the crypto world when markets become increasingly divided by different blockchains, leading to separate sets of standards and a lack of interoperability.

“By indexing thousands of sources – across social media, governance forums, research, news, podcasts, conference transcripts, and more – and combining this with proprietary search algorithms, semantic LLM (large language model) capabilities, and real-time analytics, Kaito Pro streamlines access to high-quality, actionable insights in the crypto space.”

KAITO is trading for $1.64 at time of writing, a 1.7% increase during the last 24 hours.

Disclaimer: Opinions expressed at The Daily Hodl are not investment advice. Investors should do their due diligence before making any high-risk investments in Bitcoin, cryptocurrency or digital assets. Please be advised that your transfers and trades are at your own risk, and any losses you may incur are your responsibility. The Daily Hodl does not recommend the buying or selling of any cryptocurrencies or digital assets, nor is The Daily Hodl an investment advisor. Please note that The Daily Hodl participates in affiliate marketing.

Generated Image: Midjourney

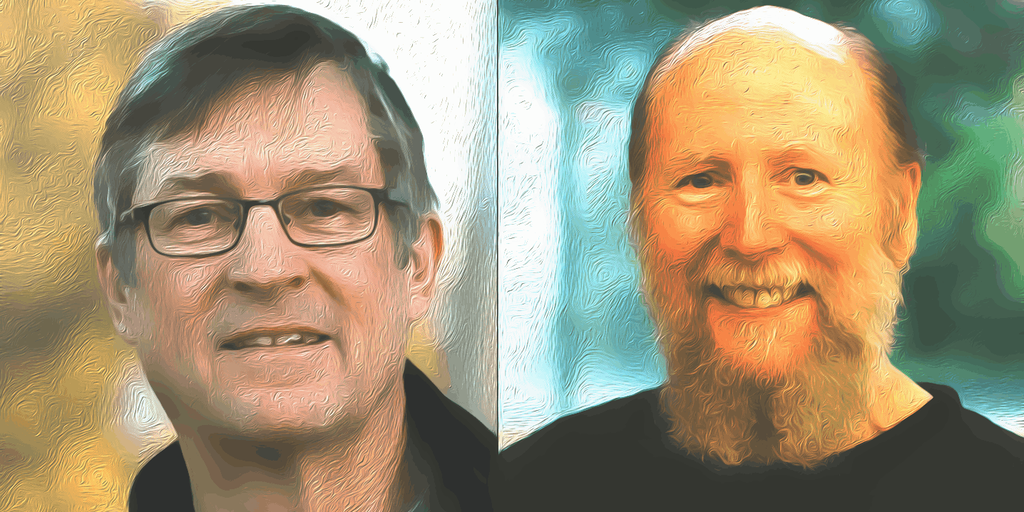

Technique Behind ChatGPT’s AI Wins Computing’s Top Prize—But Its Creators Are Worried

Published 2 weeks ago on March 6, 2025 by admin

Andrew Barto and Richard Sutton, who received computing’s highest honor this week for their foundational work on reinforcement learning, didn’t waste any time using their new platform to sound alarms about unsafe AI development practices in the industry.

The pair were announced on Wednesday as recipients of the 2024 ACM A.M. Turing Award, which is often dubbed the “Nobel Prize of Computing” and comes with a $1 million prize funded by Google.

Rather than simply celebrating their achievement, they immediately criticized what they see as dangerously rushed deployment of AI technologies.

“Releasing software to millions of people without safeguards is not good engineering practice,” Barto told The Financial Times. “Engineering practice has evolved to try to mitigate the negative consequences of technology, and I don’t see that being practiced by the companies that are developing.”

Their assessment likened current AI development practices to “building a bridge and testing it by having people use it” without proper safety checks in place, as AI companies seek to prioritize business incentives over responsible innovation.

The duo’s journey began in the late 1970s when Sutton was Barto’s student at the University of Massachusetts. Throughout the 1980s, they developed reinforcement learning—a technique where AI systems learn through trial and error by receiving rewards or penalties—when few believed in the approach.

Their work culminated in their seminal 1998 textbook “Reinforcement Learning: An Introduction,” which has been cited almost 80 thousand times and became the bible for a generation of AI researchers.

“Barto and Sutton’s work demonstrates the immense potential of applying a multidisciplinary approach to longstanding challenges in our field,” ACM President Yannis Ioannidis said in an announcement. “Reinforcement learning continues to grow and offers great potential for further advances in computing and many other disciplines.”

The $1 million Turing Award comes as reinforcement learning continues to drive innovation across robotics, chip design, and large language models, with reinforcement learning from human feedback (RLHF) becoming a critical training method for systems like ChatGPT.
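To make the trial-and-error idea concrete, here is a minimal sketch of an epsilon-greedy agent on a two-armed bandit, a standard toy problem in reinforcement learning. It is not a reconstruction of Barto and Sutton’s specific algorithms, and the reward probabilities, the function name `run_bandit`, and the parameter values are all hypothetical choices made for illustration.

```python
import random


def run_bandit(reward_probs, steps=2000, epsilon=0.1, seed=0):
    """Epsilon-greedy agent on a multi-armed bandit.

    Returns the agent's learned estimate of each action's average reward.
    """
    rng = random.Random(seed)  # fixed seed so the run is repeatable
    estimates = [0.0] * len(reward_probs)
    counts = [0] * len(reward_probs)
    for _ in range(steps):
        if rng.random() < epsilon:
            # Explore: try a random action.
            action = rng.randrange(len(reward_probs))
        else:
            # Exploit: pick the action that currently looks best.
            action = max(range(len(reward_probs)), key=lambda a: estimates[a])
        # The environment pays a reward of 1 or 0 (hypothetical probabilities).
        reward = 1.0 if rng.random() < reward_probs[action] else 0.0
        # Incrementally update the running average for the chosen action.
        counts[action] += 1
        estimates[action] += (reward - estimates[action]) / counts[action]
    return estimates


estimates = run_bandit([0.2, 0.8])
best_action = max(range(len(estimates)), key=lambda a: estimates[a])
print(estimates, best_action)
```

The agent is never told which action pays better. It only sees rewards, and its estimates drift toward the true payout rates as it keeps trying actions.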

Industry-wide safety concerns

Still, the pair’s warnings echo growing concerns from other big names in the field of computer science.

Yoshua Bengio, himself a Turing Award recipient, publicly supported their stance on Bluesky.

“Congratulations to Rich Sutton and Andrew Barto on receiving the Turing Award in recognition of their significant contributions to ML,” he said. “I also stand with them: Releasing models to the public without the right technical and societal safeguards is irresponsible.”

Their position aligns with criticisms from Geoffrey Hinton, another Turing Award winner known as the godfather of AI, as well as a 2023 statement from top AI researchers and executives, including OpenAI CEO Sam Altman, that called for mitigating extinction risks from AI as a global priority.

Former OpenAI researchers have raised similar concerns.

Jan Leike, who recently resigned as head of OpenAI’s alignment initiatives and joined rival AI company Anthropic, pointed to an inadequate safety focus, writing that “building smarter-than-human machines is an inherently dangerous endeavor.”

“Over the past years, safety culture and processes have taken a backseat to shiny products,” Leike said.

Leopold Aschenbrenner, another former OpenAI safety researcher, called security practices at the company “egregiously insufficient.” At the same time, Paul Christiano, who also previously led OpenAI’s language model alignment team, suggested there might be a “10-20% chance of AI takeover, [with] many [or] most humans dead.”

Despite their warnings, Barto and Sutton maintain a cautiously optimistic outlook on AI’s potential.

In an interview with Axios, both suggested that current fears about AI might be overblown, though they acknowledge significant social upheaval is possible.

“I think there’s a lot of opportunity for these systems to improve many aspects of our life and society, assuming sufficient caution is taken,” Barto told Axios.

Sutton sees artificial general intelligence as a watershed moment, framing it as an opportunity to introduce new “minds” into the world without them developing through biological evolution—essentially opening the gates for humanity to interact with sentient machines in the future.

Edited by Sebastian Sinclair

Figure AI Dumps OpenAI Deal After ‘Major Breakthrough’ in Robot Intelligence

Published 1 month ago on February 10, 2025 by admin

Figure AI, a U.S.-based startup focused on building AI-powered humanoid robots, severed its ties with OpenAI last week, with CEO Brett Adcock claiming a “major breakthrough” in robot intelligence that made the partnership unnecessary.

The split came just months after the two companies announced their collaboration alongside a $675 million funding round that valued Figure at $2.6 billion to kick-start its Figure 02 robot.

“Today, I made the decision to leave our Collaboration Agreement with OpenAI,” Adcock tweeted. “Figure made a major breakthrough on fully end-to-end robot AI, built entirely in-house.” The move marked a stark reversal for Figure, which previously planned to use OpenAI’s models for its Figure 02 humanoid’s natural language capabilities.

In a separate post, Adcock explained that, over time, maintaining a partnership with OpenAI to use its LLMs started to make less sense for his company.

“LLMs are getting smarter yet more commoditized. For us, LLMs have quickly become the smallest piece of the puzzle,” Adcock wrote. “Figure’s AI models are built entirely in-house, making external AI partnerships not just cumbersome but ultimately irrelevant to our success.”

Today, I made the decision to leave our Collaboration Agreement with OpenAI

Figure made a major breakthrough on fully end-to-end robot AI, built entirely in-house

We’re excited to show you in the next 30 days something no one has ever seen on a humanoid

— Brett Adcock (@adcock_brett) February 4, 2025

The decision came amid broader changes in the AI landscape. OpenAI itself had been rebuilding its robotics team, filing a trademark application mentioning “humanoid robots” alongside a wide array of other technologies like virtual reality, augmented reality, agents, and wearables. It began hiring for its first robotics positions last month.

Some AI enthusiasts were quick to note that the move could just be another consequence of the DeepSeek effect—which has already forced the most powerful AI companies in the world to lower the prices of all their SOTA models to remain competitive against open-source alternatives.

OpenAI provides one of the most expensive LLMs in the market—with DeepSeek R1 providing better results than OpenAI o1 while being available free, open source, uncensored, and highly customizable. Figure could simply be betting on an in-house foundational model to power its lineup without depending on OpenAI’s offerings.

Figure has already secured a deal with BMW Manufacturing to integrate humanoid robots into automotive production, and recently struck a partnership with an unnamed major U.S. client that would be its second big commercial client.

“It gives us potential to ship at high volumes—which will drive cost reduction and AI data collection,” Adcock posted on LinkedIn a week ago. “Between both customers, we believe there is a path to 100,000 robots over the next four years.”

Figure developed a data engine that powered its “embodied artificial intelligence” systems, enabling its robots to learn and adapt in real time through cloud and edge computing solutions. The company’s technology allowed its robots to respond to language prompts and perform tasks that incorporated language, vision, and action.

“We’re working on training the robot on how to do use case work at high speeds and high performance,” Adcock said. “Learning the use case with AI is the only path.”

OpenAI still maintains investments in other robotics ventures, including Norwegian startup 1X.

Adcock promised to reveal the fruits of Figure’s “breakthrough” within 30 days, and he wasn’t subtle with his words. He promised the announcement would be “something no one has ever seen on a humanoid.”

Guess he learned from the best.

Edited by Andrew Hayward
