artificial intelligence

Pride, Prejudice and Pixels: Meet an AI Elizabeth Bennet at Jane Austen’s House

Published 2 months ago by admin

It is a truth universally acknowledged that a single man in possession of a good fortune must be in want of an AI.

At least, that’s what Jane Austen’s House thinks. The museum in Hampshire, England—where the author lived and worked—has teamed up with AI firm StarPal and the University for the Creative Arts (UCA) to create “Lizzy,” an AI avatar based on Austen’s Pride and Prejudice heroine Elizabeth Bennet.

“It is so exciting to finally be able to lift Elizabeth Bennet off the page and to be able to have real-time conversations with her,” said Sophy Smith, director of games and creative technology at UCA, in a press release.

“This technology has the potential to transform experiences within both the museum and heritage, as well as the education sector,” Smith added, noting that the technology will enable museum visitors to “engage directly” with Austen’s character.

Creating Lizzy

The first step in creating an AI avatar was to pick a suitable fictional character.

“There are lots of AI avatars, but these were people that existed in the past,” Lauren Newport-Quinn, project manager for UCA’s Games and Innovation Nexus, told Decrypt. “We thought it’d be nice to do something with a fictional character, where no one’s been able to pick their brains before.”

The team debated which character would be best suited to the project, which needed “someone who has a lot to say, has some strong opinions, who’s very well rounded and could give good advice,” Newport-Quinn said. “That’s when we landed on Elizabeth Bennet.”

To create Lizzy’s knowledge bank, StarPal and UCA turned to a selection of novels, manuscripts, and period-accurate information curated with the help of Jane Austen’s House researchers.

“It was basically anything that was instructed by the museum director as personal knowledge that she should have,” Newport-Quinn said. As well as Pride and Prejudice itself, Lizzy draws on “scholarly studies on Pride and Prejudice, the works of Jane Austen as a whole, and studies on her life.” This was supplemented with demographic and lifestyle information from the Regency era.

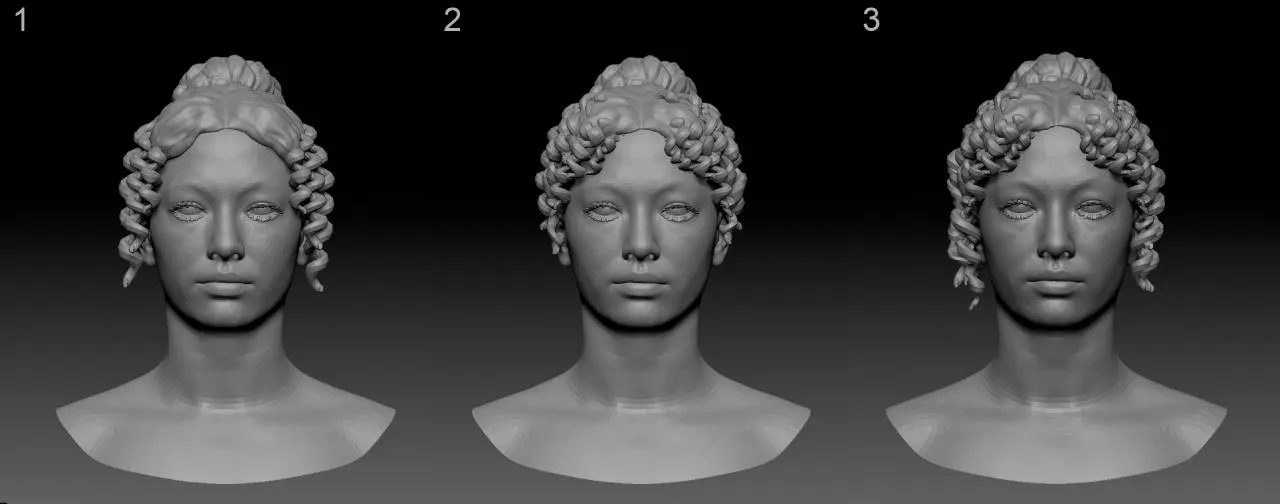

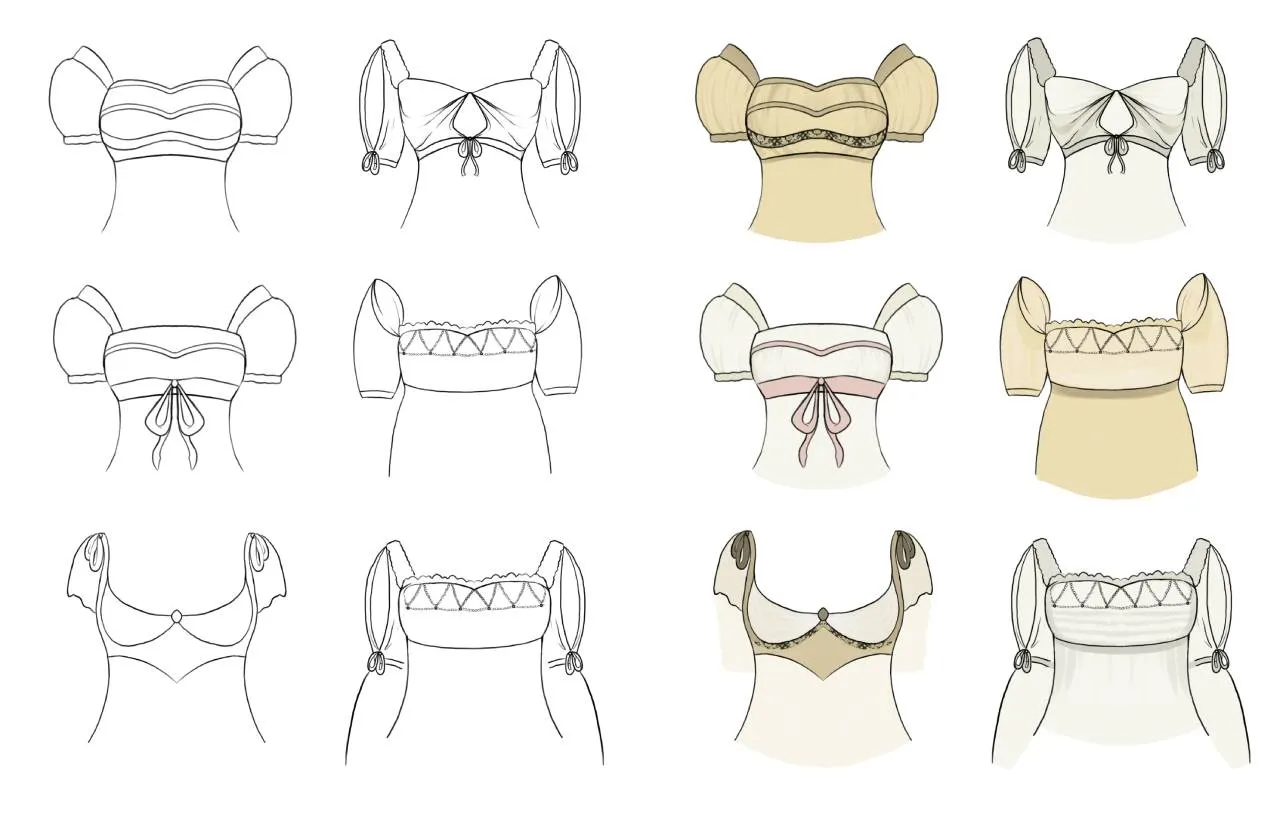

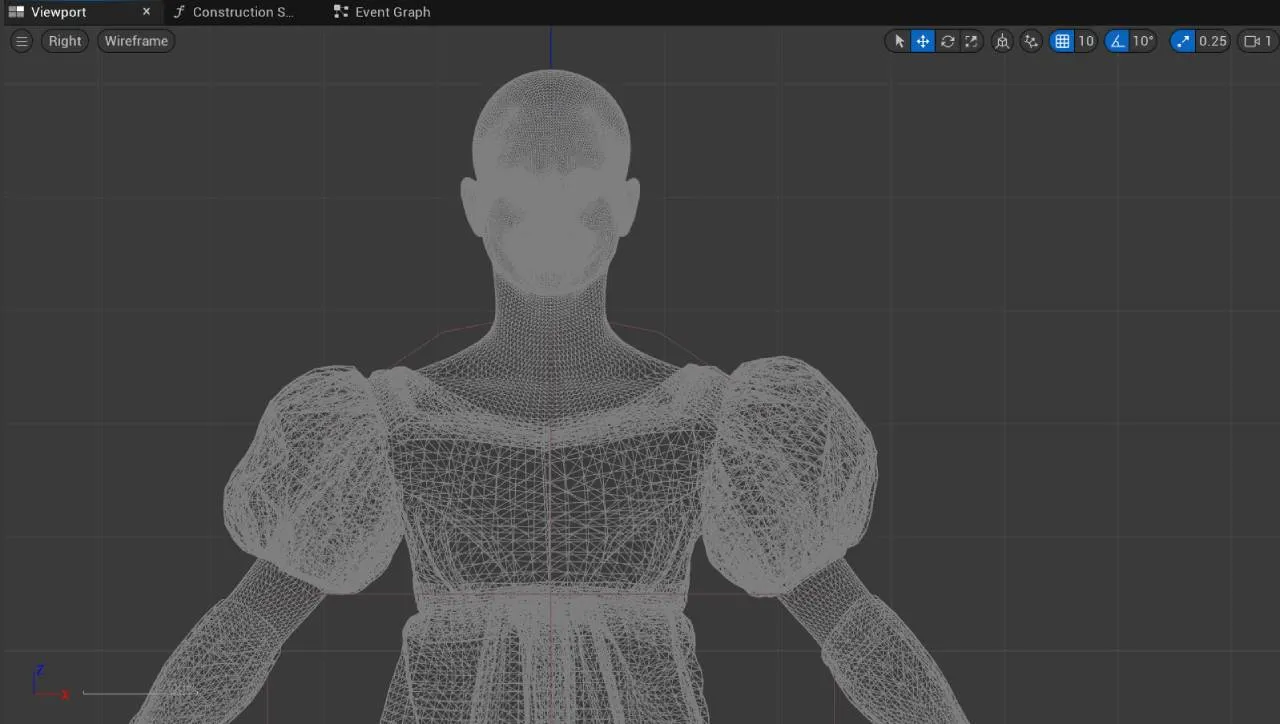

The avatar’s dress and hair, meanwhile, were designed and created by students from the Games Arts and Digital Fashion courses at UCA, drawing on fashion templates from the Regency period.

“It was exciting bringing to life the cut of dress, accessories, and embroideries, all inspired by historical drawings and descriptions,” said UCA MA Digital Fashion student Anya Haber, who created Lizzy’s dress in 3D. She added that “it showed how useful technology can be in a historical setting, letting fans engage with fictional characters.”

Conversations with AI avatars could be “an easier way to learn for certain learning styles,” Newport-Quinn explained. “If you’re not a visual passive learner, where reading something you don’t digest that information as well—if you have a conversation with someone, that might be able to enhance your level of knowledge on that topic.”

Smith affirmed that AI avatars could be used as educational tools, where “instead of only having text-based revision guides, students can now learn about literature by speaking directly to the characters.”

AI avatars

AI avatars are increasingly being used to bring fictional characters, dead celebrities, and even digital twins to life. Earlier this year, AI firm Soul Machines created an avatar of Marilyn Monroe, while London-based developer Synthesia has created “Personal Avatars” that enable users to create a digital video duplicate of themselves that can be used on social media, in marketing campaigns and training videos.

British actor and musician FKA Twigs revealed in a U.S. Senate hearing earlier this year that she had created just such a digital twin, explaining that it is “not only trained in my personality, but […] can also use my exact tone of voice to speak many languages,” and that it could help her reach a more global fanbase.

But the question of who controls AI-generated likenesses has raised concerns. In October, a bipartisan group of U.S. Senators introduced the “No Fakes Act,” which aims to outlaw the creation of AI-generated likenesses without consent.

Edited by Ryan Ozawa.



AI

Decentralized AI Project Morpheus Goes Live on Mainnet

Published 4 days ago on November 19, 2024, by admin

Morpheus went live on a public testnet, or simulated experimental environment, in July. The project promises personal AIs, also known as “smart agents,” that can empower individuals much like personal computers and search engines did in decades past. Among other tasks, agents can “execute smart contracts, connecting to users’ Web3 wallets, DApps, and smart contracts,” the team said.


artificial intelligence

How the US Military Says Its Billion Dollar AI Gamble Will Pay Off

Published 6 days ago on November 16, 2024, by admin

War is more profitable than peace, and AI developers are eager to capitalize by offering the U.S. Department of Defense various generative AI tools for the battlefields of the future.

The latest evidence of this trend came last week when Claude AI developer Anthropic announced that it was partnering with military contractor Palantir and Amazon Web Services (AWS) to provide U.S. intelligence and the Pentagon access to Claude 3 and 3.5.

Anthropic said Claude will give U.S. defense and intelligence agencies powerful tools for rapid data processing and analysis, allowing the military to perform faster operations.

Experts say these partnerships allow the Department of Defense to quickly adopt advanced AI technologies without needing to develop them internally.

“As with many other technologies, the commercial marketplace always moves faster and integrates more rapidly than the government can,” retired U.S. Navy Rear Admiral Chris Becker told Decrypt in an interview. “If you look at how SpaceX went from an idea to implementing a launch and recovery of a booster at sea, the government might still be considering initial design reviews in that same period.”

Becker, a former Commander of the Naval Information Warfare Systems Command, noted that integrating advanced technology initially designed for government and military purposes into public use is nothing new.

“The internet began as a defense research initiative before becoming available to the public, where it’s now a basic expectation,” Becker said.

Anthropic is only the latest AI developer to offer its technology to the U.S. government.

Following the Biden Administration’s memorandum in October on advancing U.S. leadership in AI, ChatGPT developer OpenAI expressed support for U.S. and allied efforts to develop AI aligned with “democratic values.” More recently, Meta also announced it would make its open-source Llama AI available to the Department of Defense and other U.S. agencies to support national security.

During Axios’ Future of Defense event in July, retired Army General Mark Milley noted advances in artificial intelligence and robotics will likely make AI-powered robots a larger part of future military operations.

“Ten to fifteen years from now, my guess is a third, maybe 25% to a third of the U.S. military will be robotic,” Milley said.

In anticipation of AI’s pivotal role in future conflicts, the DoD’s 2025 budget requests $143.2 billion for Research, Development, Test, and Evaluation, including $1.8 billion specifically allocated to AI and machine learning projects.

Protecting the U.S. and its allies is a priority. Still, Dr. Benjamin Harvey, CEO of AI Squared, noted that government partnerships also provide AI companies with stable revenue, early problem-solving, and a role in shaping future regulations.

“AI developers want to leverage federal government use cases as learning opportunities to understand real-world challenges unique to this sector,” Harvey told Decrypt. “This experience gives them an edge in anticipating issues that might emerge in the private sector over the next five to 10 years.”

He continued: “It also positions them to proactively shape governance, compliance policies, and procedures, helping them stay ahead of the curve in policy development and regulatory alignment.”

Harvey, who previously served as chief of operations data science for the U.S. National Security Agency, also said another reason developers look to make deals with government entities is to establish themselves as essential to the government’s growing AI needs.

With billions of dollars earmarked for AI and machine learning, the Pentagon is investing heavily in advancing America’s military capabilities, aiming to use the rapid development of AI technologies to its advantage.

While the public may envision AI’s role in the military as involving autonomous, weaponized robots advancing across futuristic battlefields, experts say that the reality is far less dramatic and more focused on data.

“In the military context, we’re mostly seeing highly advanced autonomy and elements of classical machine learning, where machines aid in decision-making, but this does not typically involve decisions to release weapons,” Steve Finley, president of the Unmanned Systems Division at Kratos Defense, told Decrypt. “AI substantially accelerates data collection and analysis to form decisions and conclusions.”

Founded in 1994, San Diego-based Kratos Defense has partnered extensively with the U.S. military, particularly the Air Force and Marines, to develop advanced unmanned systems like the Valkyrie fighter jet. According to Finley, keeping humans in the decision-making loop is critical to preventing the feared “Terminator” scenario from taking place.

“If a weapon is involved or a maneuver risks human life, a human decision-maker is always in the loop,” Finley said. “There’s always a safeguard—a ‘stop’ or ‘hold’—for any weapon release or critical maneuver.”

Despite how far generative AI has come since the launch of ChatGPT, experts, including author and scientist Gary Marcus, say current limitations of AI models put the real effectiveness of the technology in doubt.

“Businesses have found that large language models are not particularly reliable,” Marcus told Decrypt. “They hallucinate, make boneheaded mistakes, and that limits their real applicability. You would not want something that hallucinates to be plotting your military strategy.”

Known for critiquing overhyped AI claims, Marcus is a cognitive scientist, AI researcher, and author of six books on artificial intelligence. With regard to the dreaded “Terminator” scenario, Marcus echoed the Kratos Defense executive, emphasizing that fully autonomous robots powered by AI would be a mistake.

“It would be stupid to hook them up for warfare without humans in the loop, especially considering their current clear lack of reliability,” Marcus said. “It concerns me that many people have been seduced by these kinds of AI systems and not come to grips with the reality of their reliability.”

As Marcus explained, many in the AI field hold the belief that simply feeding AI systems more data and computational power would continually enhance their capabilities—a notion he described as a “fantasy.”

“In the last weeks, there have been rumors from multiple companies that the so-called scaling laws have run out, and there’s a period of diminishing returns,” Marcus added. “So I don’t think the military should realistically expect that all these problems are going to be solved. These systems probably aren’t going to be reliable, and you don’t want to be using unreliable systems in war.”

Edited by Josh Quittner and Sebastian Sinclair


artificial intelligence

AI Startup Hugging Face is Building Small LMs for ‘Next Stage Robotics’

Published 1 week ago on November 12, 2024, by admin

AI startup Hugging Face envisions that small—not large—language models will be used for applications including “next stage robotics,” its Co-Founder and Chief Science Officer Thomas Wolf said.

“We want to deploy models in robots that are smarter, so we can start having robots that are not only on assembly lines, but also in the wild,” Wolf said while speaking at Web Summit in Lisbon today. But that goal, he said, requires low latency. “You cannot wait two seconds so that your robots understand what’s happening, and the only way we can do that is through a small language model,” Wolf added.

Small language models “can do a lot of the tasks we thought only large models could do,” Wolf said, adding that they can also be deployed on-device. “If you think about this kind of game changer, you can have them running on your laptop,” he said. “You can have them running even on your smartphone in the future.”

Ultimately, he envisions small language models running “in almost every tool or appliance that we have, just like today, our fridge is connected to the internet.”

The firm released its SmolLM language model earlier this year. “We are not the only one,” said Wolf, adding that, “Almost every open source company has been releasing smaller and smaller models this year.”

He explained that, “For a lot of very interesting tasks that we need that we could automate with AI, we don’t need to have a model that can solve the Riemann conjecture or general relativity.” Instead, simple tasks such as data wrangling, image processing and speech can be performed using small language models, with corresponding benefits in speed.

The performance of Meta’s Llama 1B model, with 1 billion parameters, this year is “equivalent, if not better than, the performance of a 10 billion parameters model of last year,” he said. “So you have a 10 times smaller model that can reach roughly similar performance.”

“A lot of the knowledge we discovered for our large language model can actually be translated to smaller models,” Wolf said. He explained that the firm trains them on “very specific data sets” that are “slightly simpler, with some form of adaptation that’s tailored for this model.”

Those adaptations include “very tiny, tiny neural nets that you put inside the small model,” he said. “And you have an even smaller model that you add into it and that specializes,” a process he likened to “putting a hat for a specific task that you’re gonna do. I put my cooking hat on, and I’m a cook.”
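One way to picture the “hat” Wolf describes is a small add-on network attached to a frozen base layer. The sketch below is purely illustrative and is not Hugging Face’s code: the class name `AdaptedLayer`, the helper functions, the toy 4x4 weights, and the low-rank (two small matrices) design are all assumptions chosen to show how an adapter can add far fewer parameters than the layer it modifies.

```python
# Illustrative sketch only. AdaptedLayer and its fields are hypothetical names,
# and the low-rank adapter design is an assumption, not a documented method.

def matvec(matrix, vector):
    """Multiply a matrix (a list of rows) by a vector (a list of numbers)."""
    return [sum(w * x for w, x in zip(row, vector)) for row in matrix]


def count_params(matrix):
    """Count the entries in a matrix stored as a list of rows."""
    return sum(len(row) for row in matrix)


class AdaptedLayer:
    """A frozen base layer plus a small task-specific adapter (the "hat")."""

    def __init__(self, base, down, up):
        self.base = base  # frozen weights, shape d_out x d_in
        self.down = down  # adapter projection, shape r x d_in, with r small
        self.up = up      # adapter projection, shape d_out x r

    def forward(self, x, use_adapter=True):
        out = matvec(self.base, x)
        if not use_adapter:
            return out  # plain base layer, no hat on
        # The adapter squeezes x down to r numbers, expands it back,
        # and adds the result as a correction to the base output.
        delta = matvec(self.up, matvec(self.down, x))
        return [o + d for o, d in zip(out, delta)]


# Toy example: a 4x4 identity base layer and a rank-1 "cooking hat".
base = [[1, 0, 0, 0], [0, 1, 0, 0], [0, 0, 1, 0], [0, 0, 0, 1]]
cooking_hat = AdaptedLayer(base, down=[[1, 0, 0, 0]], up=[[0.5], [0], [0], [0]])

x = [2, 1, 0, 0]
print(cooking_hat.forward(x, use_adapter=False))  # base behavior
print(cooking_hat.forward(x))                     # behavior with the hat on
print(count_params(base),
      count_params(cooking_hat.down) + count_params(cooking_hat.up))
```

In this toy setup the adapter holds half as many numbers as the base layer; with realistic layer sizes the gap would be much larger, which is what makes swapping hats per task cheap.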

In the future, Wolf said, the AI space will split across two main trends.

“On the one hand, we’ll have this huge frontier model that will keep getting bigger, because the ultimate goal is to do things that human cannot do, like new scientific discoveries,” using LLMs, he said. On the other, the long tail of AI applications will see the technology “embedded a bit everywhere, like we have today with the internet.”

Edited by Stacy Elliott.
