
Looks Like Satoshi Nakamoto Left Us With Another Mystery

Published 2 months ago by admin

WHO WE’RE FOLLOWING: Wicked Bitcoin

It’s 2024 and there’s a new mystery surfacing around Bitcoin’s creator Satoshi Nakamoto.

In this case, discussion of a new enigma first surfaced on X, where everyone’s favorite ch-artist Wicked Bitcoin posted the discovery.

Essentially, the finding boils down to this:

- It’s clear that Satoshi Nakamoto was an early Bitcoin miner – after all, he sent bitcoins to early contributors, and since he didn’t set himself up with a sweet “founder’s allocation,” they could have only come from mining.

- That said, we don’t really know how many bitcoins Satoshi mined. (He never commented on it publicly, aside from one reported instance where he claimed to “own a lot” of bitcoins.) Most of what’s “common knowledge” is from one study done in 2013, and while it’s become something like lore, there’s a lot of dispute about what it proves.

- Essentially, the study suggested Satoshi’s mining activity was visible on the blockchain via what’s been called the “Patoshi pattern.” Long story short, an early, very large miner changed the way they embedded data on the blockchain (via a non-standard iteration of the ExtraNonce), and most believe that this could have only been done by Nakamoto (who knew the most about the software in its infancy).

- Jameson Lopp (co-founder of Casa) built on this work in 2022. He added new analysis about this mystery miner, including the finding that they weren’t seeking to maximize their profitability. Some felt this was another strong data point that Patoshi was Satoshi.

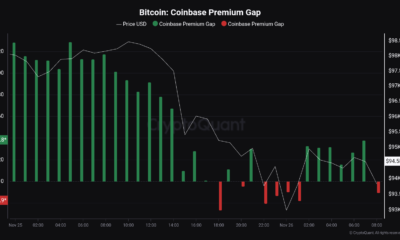

- Now, Wicked is adding to the mystery with a finding that builds on the earlier “Patoshi” analyses. By plotting this miner’s blocks on a date-time axis, he finds a notable gap in the timestamps of this miner’s blocks in early 2009.

Of course, as to what we can conclude from this data, as Wicked’s comments section shows, that’s up for debate.

Adding to the issue is that there is a dearth of historical information about Bitcoin from 2009. What’s been uncovered amounts to a few public email lists and private correspondences that have been published over the years (some forced by court hearings).

As far back as May-June 2009, there were no Bitcoin forums, and it’s possible there could have been only a dozen people mining the network. Martti Malmi (Satoshi’s first real right-hand developer) would have only just been starting his work.

This means that we don’t really have a concrete timeline of what occurred and why, beyond what’s visible in the data. Even there, there isn’t much to discuss: on many days in 2009 there weren’t any Bitcoin transactions at all.

Wicked’s thesis here is that the above gaps show instances where the “Patoshi miner” went offline, and then had to restart operations. At this point, the miner was so powerful that they simply overwrote any blocks found by other miners in their absence.
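The kind of gap Wicked describes can be looked for mechanically. The following is only a minimal sketch, assuming the miner’s block timestamps have already been extracted into a list; the function name `find_gaps`, the two-hour threshold, and the sample timestamps are all made up for illustration and are not taken from Wicked’s analysis or from real chain data.

```python
from datetime import datetime, timedelta

def find_gaps(timestamps, threshold):
    """Return (start, end, length) for each gap between consecutive
    timestamps that is longer than `threshold`."""
    # Block timestamps are not guaranteed to be monotonic, so sort first.
    ordered = sorted(timestamps)
    gaps = []
    for earlier, later in zip(ordered, ordered[1:]):
        if later - earlier > threshold:
            gaps.append((earlier, later, later - earlier))
    return gaps

# Hypothetical timestamps, for illustration only (not real blockchain data).
sample = [
    datetime(2009, 1, 10, 0, 0),
    datetime(2009, 1, 10, 0, 12),
    datetime(2009, 1, 10, 0, 25),
    datetime(2009, 1, 10, 9, 40),  # long silence before this block
    datetime(2009, 1, 10, 9, 51),
]
gaps = find_gaps(sample, threshold=timedelta(hours=2))
for start, end, length in gaps:
    print(f"gap of {length} between {start} and {end}")
```

A gap found this way only shows that no blocks from the miner carry timestamps in that window; it says nothing by itself about why.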

Wicked draws a few conclusions from this, going so far as to suggest Satoshi may have been testing how well the network held up to “51% attacks.” This would be plausible – after all, the idea that Bitcoin was robust enough to operate as long as a majority of participants were honest was his major contribution to digital cash as a concept.

(Really, you could argue (as I have) that’s the only thing Satoshi brought to Bitcoin that was new, his primary skill being to take hardened computer science concepts and stitch them together.)

That said, there’s a bit of a bearish read here. An accidental 51% attack would have still made honest mining moot, and this could be fodder for critics who like to paint Satoshi as the kind of errant experimenter we see on other chains today.

Still, there’s a lot of conjecture here, and without more analysis (or more corroborating evidence) it’s hard to draw a firm conclusion.

At any rate, we can marvel at the mystery that nearly 16 years later, Satoshi has succeeded so well in hiding his tracks from history.


Libsecp256k1

Safegcd’s Implementation Formally Verified

Published 1 day ago on November 25, 2024, by admin

Introduction

The security of Bitcoin, and other blockchains, such as Liquid, hinges on the use of digital signatures algorithms such as ECDSA and Schnorr signatures. A C library called libsecp256k1, named after the elliptic curve that the library operates on, is used by both Bitcoin Core and Liquid, to provide these digital signature algorithms. These algorithms make use of a mathematical computation called a modular inverse, which is a relatively expensive component of the computation.

In “Fast constant-time gcd computation and modular inversion,” Daniel J. Bernstein and Bo-Yin Yang develop a new modular inversion algorithm. In 2021, this algorithm, referred to as “safegcd,” was implemented for libsecp256k1 by Peter Dettman. As part of the vetting process for this novel algorithm, Blockstream Research was the first to complete a formal verification of the algorithm’s design by using the Coq proof assistant to formally verify that the algorithm does indeed terminate with the correct modular inverse result on 256-bit inputs.
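To give a feel for the kind of computation involved, here is a minimal Python sketch of a division-step ("divstep") style modular inverse in the spirit of safegcd. It is a simplified, variable-time illustration and not the libsecp256k1 code: the function names `div2` and `modinv` are chosen for this example, and the real implementations batch many steps into matrix operations on fixed-width integers.

```python
def div2(M, x):
    """Return x/2 mod M for odd M, without leaving the integers."""
    if x & 1:      # make x even by adding the (odd) modulus
        x += M
    return x // 2

def modinv(M, x):
    """Inverse of x modulo an odd M, assuming gcd(x, M) == 1."""
    assert M & 1
    delta, f, g, d, e = 1, M, x, 0, 1
    while g != 0:
        if delta > 0 and g & 1:
            delta, f, g, d, e = 1 - delta, g, (g - f) // 2, e, div2(M, e - d)
        elif g & 1:
            delta, f, g, d, e = 1 + delta, f, (g + f) // 2, d, div2(M, e + d)
        else:
            delta, f, g, d, e = 1 + delta, f, g // 2, d, div2(M, e)
        # Loop invariants: d*x = f (mod M) and e*x = g (mod M).
        assert (d * x - f) % M == 0
        assert (e * x - g) % M == 0
    assert f in (1, -1)    # |f| is the gcd, which must be 1
    return (d * f) % M     # since d*x = f = +-1, d*f is the inverse

# The secp256k1 field prime.
P = 2**256 - 2**32 - 977
x = 0x1234567890ABCDEF
inv = modinv(P, x)
```

The asserted invariants inside the loop are the sort of statement a formal proof has to establish for every iteration, rather than check at run time for one input.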

The Gap between Algorithm and Implementation

The formalization effort in 2021 only showed that the algorithm designed by Bernstein and Yang works correctly. However, using that algorithm in libsecp256k1 requires implementing the mathematical description of the safegcd algorithm within the C programming language. For example, the mathematical description of the algorithm performs matrix multiplication of vectors that can be as wide as 256-bit signed integers. However, the C programming language natively provides integers only up to 64 bits (or 128 bits with some language extensions).

Implementing the safegcd algorithm requires programming the matrix multiplication and other computations using C’s 64-bit integers. Additionally, many other optimizations have been added to make the implementation fast. In the end, there are four separate implementations of the safegcd algorithm in libsecp256k1: two constant-time algorithms for signature generation, one optimized for 32-bit systems and one optimized for 64-bit systems, and two variable-time algorithms for signature verification, again one for 32-bit systems and one for 64-bit systems.

Verifiable C

In order to verify the C code correctly implements the safegcd algorithm, all the implementation details must be checked. We use Verifiable C, part of the Verified Software Toolchain for reasoning about C code using the Coq theorem prover.

Verification proceeds by specifying preconditions and postconditions using separation logic for every function undergoing verification. Separation logic is a logic specialized for reasoning about subroutines, memory allocations, concurrency and more.

Once each function is given a specification, verification proceeds by starting from a function’s precondition, and establishing a new invariant after each statement in the body of the function, until finally establishing the postcondition at the end of the function body or at each return statement. Most of the formalization effort is spent “between” the lines of code, using the invariants to translate the raw operations of each C expression into higher-level statements about what the data structures being manipulated represent mathematically. For example, what the C language regards as an array of 64-bit integers may actually be a representation of a 256-bit integer.
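The array-of-words idea can be sketched in a few lines of Python. This is an illustration under stated assumptions rather than the library’s actual layout: the limb width of 62 bits, the limb count of 5, and the names `to_limbs` and `from_limbs` are choices made for this example. The assertions play the role of a precondition and a postcondition relating the low-level array to the integer it represents.

```python
LIMB_BITS = 62   # assumption for this sketch: each word carries 62 bits
N_LIMBS = 5      # 5 * 62 = 310 bits, enough room for a 256-bit value
MASK = (1 << LIMB_BITS) - 1

def from_limbs(limbs):
    """The mathematical integer that an array of limbs represents."""
    return sum(limb << (LIMB_BITS * i) for i, limb in enumerate(limbs))

def to_limbs(x):
    """Split a 256-bit non-negative integer into little-endian limbs."""
    assert 0 <= x < (1 << 256)                  # precondition
    limbs = [(x >> (LIMB_BITS * i)) & MASK for i in range(N_LIMBS)]
    assert all(0 <= limb <= MASK for limb in limbs)
    assert from_limbs(limbs) == x               # postcondition
    return limbs

value = 2**256 - 2**32 - 977
limbs = to_limbs(value)
```

In a proof, `from_limbs` corresponds to the abstraction function: every statement about the array is translated through it into a statement about the integer.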

The end result is a formal proof, verified by the Coq proof assistant, that libsecp256k1’s 64-bit variable time implementation of the safegcd modular inverse algorithm is functionally correct.

Limitations of the Verification

There are some limitations to the functional correctness proof. The separation logic used in Verifiable C implements what is known as partial correctness. That means it only proves the C code returns with the correct result if it returns, but it doesn’t prove termination itself. We mitigate this limitation by using our previous Coq proof of the bounds on the safegcd algorithm to prove that the loop counter value of the main loop in fact never exceeds 11 iterations.

Another issue is that the C language itself has no formal specification. Instead the Verifiable C project uses the CompCert compiler project to provide a formal specification of a C language. This guarantees that when a verified C program is compiled with the CompCert compiler, the resulting assembly code will meet its specification (subject to the above limitation). However this doesn’t guarantee that the code generated by GCC, clang, or any other compiler will necessarily work. For example, C compilers are allowed to have different evaluation orders for arguments within a function call. And even if the C language had a formal specification any compiler that isn’t itself formally verified could still miscompile programs. This does occur in practice.

Lastly, Verifiable C doesn’t support passing structures, returning structures or assigning structures. While in libsecp256k1, structures are always passed by pointer (which is allowed in Verifiable C), there are a few occasions where structure assignment is used. For the modular inverse correctness proof, there were 3 assignments that had to be replaced by a specialized function call that performs the structure assignment field by field.

Summary

Blockstream Research has formally verified the correctness of libsecp256k1’s modular inverse function. This work provides further evidence that verification of C code is possible in practice. Using a general purpose proof assistant allows us to verify software built upon complex mathematical arguments.

Nothing prevents the rest of the functions implemented in libsecp256k1 from being verified as well. Thus it is possible for libsecp256k1 to obtain the highest possible software correctness guarantees.

This is a guest post by Russell O’Connor and Andrew Poelstra. Opinions expressed are entirely their own and do not necessarily reflect those of BTC Inc or Bitcoin Magazine.


BitVM earlier this year came under fire due to the large liquidity requirements necessary in order for a rollup (or other system operator) to process withdrawals for the two way peg mechanisms being built using the BitVM design. Galaxy, an investor in Citrea, has performed an economic analysis looking at their assumptions regarding economic conditions necessary to make a BitVM based two way peg a sustainable operation.

For those unfamiliar, pegging into a BitVM system requires the operators to take custody of user funds in an n-of-n multisig, creating a set of pre-signed transactions allowing the operator facilitating withdrawals to claim funds back after a challenge period. The user is then issued backed tokens on the rollup or other second layer system.

Pegouts are slightly more complicated. The user must burn their funds on the second layer system, and then craft a Partially Signed Bitcoin Transaction (PSBT) paying them funds back out on the mainchain, minus a fee to the operator processing withdrawals. They can keep crafting new PSBTs paying the operator higher fees until the operator accepts. At this point the operator will take their own liquidity and pay out the user’s withdrawal.

The operator can then, after having processed withdrawals adding up to a deposited UTXO, initiate the withdrawal out of the BitVM system to make themselves whole. This includes a challenge-response period to protect against fraud, which Galaxy models as a 14 day window. During this time period anyone who can construct a fraud proof showing that the operator did not honestly honor the withdrawals of all users in that epoch can initiate the challenge. If the operator cannot produce a proof they correctly processed all withdrawals, then the connector input (a special transaction input that is required to use their pre-signed transactions) the operator uses to claim their funds back can be burned, locking them out of the ability to recuperate their funds.

Now that we’ve gotten through a mechanism refresher, let’s look at what Galaxy modeled: the economic viability of operating such a peg.

There are a number of variables that must be considered when looking at whether this system can be operated profitably: transaction fees, the amount of liquidity available, and, most importantly, the opportunity cost of devoting capital to processing withdrawals from a BitVM peg. This last one is of critical importance in being able to source liquidity to manage the peg in the first place. If liquidity providers (LPs) can earn more money doing something else with their capital, then they are effectively losing money by using it to operate a BitVM system.

All of these factors have to be covered, profitably, by the aggregate of fees users pay to peg out of the system for it to make sense to operate, i.e. for the operation to generate a profit. The two references for competing interest rates Galaxy looked at were Aave, a DeFi protocol operating on Ethereum, and OTC markets in Bitcoin.

At the time of their report, Aave earned lenders approximately 1% interest on WBTC (Wrapped Bitcoin pegged into Ethereum) lent out. OTC lending, on the other hand, had rates as high as 7.6%. This shows a stark difference in the expected return on capital between DeFi users and institutional investors. A BitVM system must generate revenue from its users in excess of these interest rates in order to attract capital to the peg from these other systems.

By Galaxy’s projections, as long as LPs are targeting a 10% Annual Percentage Yield (APY), a peg-out transaction should cost individual users roughly 0.38%. The only wildcard variable, so to speak, is the transaction fees that the operator has to pay during high-fee environments. The user’s funds are paid out from the operator’s liquidity immediately after the pegout is initiated, while the operator has to wait out the two-week challenge period in order to claim back the fronted liquidity.
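A figure of about 0.38% is what simple arithmetic gives if the operator’s capital is locked for the 14-day challenge window and must earn 10% a year. The sketch below is a back-of-the-envelope check under those assumptions, not a reproduction of Galaxy’s model: the function name `pegout_fee_rate` is hypothetical, the interest is treated as simple (non-compounding), and on-chain transaction fees are ignored.

```python
def pegout_fee_rate(target_apy, lockup_days):
    """Fee, as a fraction of the withdrawal, needed for capital locked
    for `lockup_days` to earn `target_apy` per year (simple interest)."""
    return target_apy * lockup_days / 365

CHALLENGE_DAYS = 14  # challenge window as modeled in the article

fee_at_10_percent = pegout_fee_rate(0.10, CHALLENGE_DAYS)
print(f"{fee_at_10_percent:.2%}")  # prints 0.38%

# The same formula applied to the two benchmark rates quoted above.
fee_matching_aave = pegout_fee_rate(0.01, CHALLENGE_DAYS)
fee_matching_otc = pegout_fee_rate(0.076, CHALLENGE_DAYS)
```

Under the same assumptions, matching the quoted OTC rate requires a noticeably higher per-withdrawal fee than matching the quoted Aave rate, which is the competition for capital the article describes.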

If fees were to spike in the meantime, this would eat into the operator’s profit margins when they eventually claim their funds back from the BitVM peg. However, in theory operators could simply wait until fees subside to initiate the challenge period and claim their funds back.

Overall the viability of a BitVM peg comes down to being able to generate a high enough yield on liquidity used to process withdrawals to attract the needed capital. To attract more institutional capital, these yields must be higher in order to compete with OTC markets.

The full Galaxy report can be read here.


ColliderScript

ColliderScript: A $50M Bitcoin Covenant With No New Opcodes

Published 2 weeks ago on November 15, 2024, by admin

While the last year or two have seen a number of proposals for covenant-enabling extensions to Bitcoin, there has always been a suspicion among experts that covenants may be possible without any extensions. Evidence for this has come in two forms: an expanding repertoire of previously-thought-impossible computations in Script (culminating in the BitVM project’s effort to implement every RISC-V opcode), and a series of “near-misses” by which Bitcoin developers have found ways that covenants would have been possible, if not for some obscure historical quirk of the system.

Ethan Heilman, Avihu Levy, Victor Kolobov and I have developed a scheme which proves this suspicion was well founded. Our scheme, ColliderScript, enables covenants on Bitcoin today, under fairly reasonable cryptographic assumptions and at a probable cost around 50 million dollars per transaction (plus some hardware R&D).

Despite the outlandish costs to use ColliderScript, setting it up is very cheap, and doing so (alongside an ordinary spending mechanism, using Taproot to separate the two) just might save your coins in case a quantum computer shows up out of nowhere and blows up the system.

No doubt many readers, after reading these claims, are raising one eyebrow to the sky. By the time you are done reading this article, the other one will be just as high.

Covenants

The context of this discussion, for those unfamiliar, is that Bitcoin has a built-in programming language, called Bitcoin Script, which is used to authorize the spending of coins. In its earliest days, Script contained a rich set of arithmetic opcodes which could be used to implement arbitrary computations. But in the summer of 2010, Satoshi disabled many of these in order to quash a series of serious bugs. (Returning to the pre-2010 version of Script is the goal of the Great Script Restoration Project; OP_CAT is a less ambitious proposal in the same direction.) The idea of covenants — transactions which use Script to control the quantity and destination of their coins — didn’t appear for several more years, and the realization that these opcodes would’ve been sufficient to implement covenants didn’t come until even later. By that point, the community was too large and cautious to simply “re-enable” the old opcodes in the same way that they’d been disabled.

Covenants are hypothetical Script constructions that would allow users to control not only the conditions under which coins are spent, but also their destination. This is the basis for many would-be constructions on Bitcoin, from vaults and rate-limited wallets, to new fee-market mechanisms like payment pools, to less-savory constructions like distributed finance and MEV. Millions of words have been spent debating the desirability of covenants and what they would do to the nature of Bitcoin.

In this article I will sidestep this debate, and argue simply that covenants are possible on Bitcoin already; that we will eventually discover how they are possible (without great computational cost or questionable cryptographic assumptions); and that our debate about new extensions to Bitcoin shouldn’t be framed as though individual changes will be the dividing line between a covenant-less or covenant-ful future for Bitcoin.

History

Over the years, a tradition developed of finding creative ways to do non-trivial things even with a limited Script. The Lightning Network was one instance of this, as were less widely-known ideas like probabilistic payments or collision bounties for hash functions. Obscure edge cases, like the SIGHASH_SINGLE bug or the use of public key recovery to obtain a “transaction hash” within the Script interpreter, were noticed and explored, but nobody ever found a way to make them useful. Meanwhile, Bitcoin itself evolved to be more tightly-defined, closing many of these doors. For example, Segwit eliminated the SIGHASH_SINGLE bug and explicitly separated program data from witness data; Taproot got rid of public key recovery, which had provided flexibility at the cost of potentially undermining security for adaptor signatures or multisignatures.

Despite these changes, Script hacking continued, as did the belief among die-hards that somehow, some edge-case might be found that would enable covenant support in Bitcoin. In the early 2020s, two developments in particular made waves. One was my own discovery that signature-based covenants hadn’t died with public key recovery, and that in particular, if we had even a single disabled opcode back — OP_CAT — this would be enough for a fairly efficient covenant construction. The other was BitVM, a novel way to do large computations in Script across multiple transactions, which inspired a tremendous amount of research into basic computations within single transactions.

These two developments inspired a lot of activity and excitement around covenants, but they also crystallized our thinking about the fundamental limitations of Script. In particular, it seemed as though covenants might be impossible without new opcodes, since transaction data was only ever fed into Script through 64-byte signatures and 32-byte public keys, while the opcodes supporting BitVM could only work with 4-byte objects. This divide was termed “Small Script” and “Big Script”, and finding a bridge between the two became synonymous (in my mind, at least) with finding a covenant construction.

Functional Encryption and PIPEs

It was also observed that, with a bit of moon math, it might be possible to do covenants entirely within signatures themselves, without ever leaving Big Script. This idea was articulated by Jeremy Rubin in his paper FE’d Up Covenants, which described how to implement covenants using a hypothetical crypto primitive called functional encryption. Months later, Misha Komorov proposed a specific scheme called PIPEs which appears to make this hypothetical idea a reality.

This is an exciting development, though it suffers from two major limitations: one is that it involves a trusted setup, meaning that the person who creates the covenant is able to bypass its rules. (This is fine for something like vaults, in which the owner of the coins can be trusted to not undermine his own security; but it is not fine for something like payment pools where the coins in the covenant are not owned by the covenant’s creator.) The other limitation is that it involves cutting-edge cryptography with unclear security properties. This latter limitation will fade away with more research, but the trusted setup is inherent to the functional-encryption approach.

ColliderScript

This overview brings us to the current situation: we would like to find a way to implement covenants using the existing form of Bitcoin Script, and we believe that the way to do this is to find some sort of bridge between the “Big Script” of transaction signatures and the “Small Script” of arbitrary computations. It appears that no opcodes can directly form this bridge (see Appendix A in our paper for a classification of all opcodes in terms of their input and output size). A bridge, if one existed, would be some sort of construction that took a single large object and demonstrated that it was exactly equal to the concatenation of several small objects. It appears, based on our classification of opcodes, that this is impossible.
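Outside of Script, the statement such a bridge has to establish is trivial to write down. The Python sketch below only spells out that statement, namely that a large object equals the concatenation of 4-byte pieces; it is not something Script can do directly, which is the point of the paragraph above. The names `split_chunks` and `is_exact_bridge` and the placeholder 64-byte value are made up for this illustration.

```python
CHUNK_SIZE = 4  # size of the objects Small Script opcodes can work with

def split_chunks(big):
    """Split a large byte string into consecutive 4-byte chunks."""
    assert len(big) % CHUNK_SIZE == 0
    return [big[i:i + CHUNK_SIZE] for i in range(0, len(big), CHUNK_SIZE)]

def is_exact_bridge(big, chunks):
    """True when `big` is exactly the concatenation of the 4-byte `chunks`."""
    return (all(len(chunk) == CHUNK_SIZE for chunk in chunks)
            and b"".join(chunks) == big)

# Placeholder 64-byte value standing in for a signature.
signature = bytes(range(64))
chunks = split_chunks(signature)
```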

However, in cryptography we often weaken notions like “exactly equal”, instead using notions like “computationally indistinguishable” or “statistically indistinguishable”, and thereby evade impossibility results. Maybe, by using the built-in cryptographic constructs of Big Script — hashes and elliptic curve signatures — and by mirroring them using BitVM constructions in Small Script, we could find a way to show that a large object was “computationally indistinguishable” from a series of small ones? With ColliderScript, this is exactly what we did.

What does this mean? Well, recall the hash function collision bounty that we mentioned earlier. The premise of this bounty is that anybody who can “collide” a hash function, by providing two inputs that have the same hash output, can prove in Big Script that they did so, and thereby claim the bounty. Since the input space of a hash function is much bigger (all bytestrings of up to 520 bytes in size) than the output space (bytestrings of exactly 32 bytes in size), mathematically speaking there must be many many such collisions. And yet, with the exception of SHA1, nobody has found a faster way to find these collisions than by just calling the hash function over and over and seeing if the result matches that of an earlier attempt.

This means that, on average, for a 160-bit hash function like SHA1 or RIPEMD160, a user will need to do at least 2^80 work, or a million million million million iterations, to find a collision. (In the case of SHA1, there is a shortcut if the user is able to use inputs of a particular form; but our construction forbids these so for our purposes we can ignore this attack.) This assumes that the user has an effectively infinite amount of memory to work with; with more realistic assumptions, we need to add another factor of one hundred or so.
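The "hash over and over and compare with earlier attempts" search can be tried at toy scale. The sketch below truncates SHA1 to 24 bits so that a collision turns up after a few thousand attempts instead of roughly 2^80; the truncation, the counter-based inputs, and the function names are assumptions made for this illustration, and the memory-hungry dictionary is exactly the "infinite memory" simplification mentioned above.

```python
import hashlib

def truncated_sha1(data, nbytes):
    """First `nbytes` bytes of SHA1(data): a deliberately weak toy hash."""
    return hashlib.sha1(data).digest()[:nbytes]

def find_collision(nbytes):
    """Hash successive inputs until two of them share a truncated digest.

    Returns (first_input, second_input, number_of_hashes_computed)."""
    seen = {}          # digest -> input that produced it
    counter = 0
    while True:
        message = counter.to_bytes(8, "big")
        digest = truncated_sha1(message, nbytes)
        if digest in seen:
            return seen[digest], message, counter + 1
        seen[digest] = message
        counter += 1

first, second, attempts = find_collision(3)   # 24-bit toy hash
print(f"collision after {attempts} hashes")
```

For an n-bit output the expected work grows like 2^(n/2), which is why 160 bits corresponds to about 2^80 hash evaluations.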

If we imagine that SHA1 and RIPEMD160 can be computed as efficiently as Bitcoin ASICs compute SHA256, then the cost of such a computation would be about the same as 200 blocks, or around 625 BTC (46 million dollars). This is a lot of money, but many people have access to such a sum, so this is possible.

To find a triple collision, or three inputs that evaluate to the same thing, would take about 2^110 work, even with very generous assumptions about access to memory. To get this number, we need to add another factor of 16 million to our cost — bringing our total to over 700 trillion dollars. This is also a lot of money, and one which nobody has access to today.
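The cost figures in the last few paragraphs can be re-derived with a few lines of arithmetic. This is a sketch that only multiplies out the numbers quoted above; the 3.125 BTC block subsidy is the one assumption added here (transaction fees are ignored), and the implied price per bitcoin is simply what the quoted dollar figure works out to.

```python
BLOCKS = 200            # from the text: "about the same as 200 blocks"
SUBSIDY_BTC = 3.125     # assumption: current block subsidy, fees ignored
PAIR_COST_USD = 46e6    # from the text: 46 million dollars
TRIPLE_FACTOR = 16e6    # from the text: "another factor of 16 million"

pair_cost_btc = BLOCKS * SUBSIDY_BTC                 # 625.0 BTC
implied_usd_per_btc = PAIR_COST_USD / pair_cost_btc  # 73,600 dollars
triple_cost_usd = PAIR_COST_USD * TRIPLE_FACTOR      # 7.36e14 dollars

birthday_work = 2**80   # about 1.2 * 10**24 hash evaluations

print(f"pair collision:   {pair_cost_btc} BTC")
print(f"triple collision: {triple_cost_usd / 1e12:.0f} trillion dollars")
```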

The crux of our construction is as follows: to prove that a series of small objects is equivalent to a single large object, we first find a hash collision between our target object (which we assume can be rerandomized somehow, or else we’d be doing a “second-preimage search” rather than a collision search, which would be much much harder) and an “equivalence tester object”. These equivalence tester objects are constructed in a way that they can be easily manipulated both in Big Script and Small Script.

Our construction then checks, in Bitcoin Script, both that our large object collides with our equivalence tester (using exactly the same methods as in the hash-collision bounty) and that our series of small objects collides with the equivalence tester (using complex constructions partially cribbed from the BitVM project, and described in detail in the paper). If these checks pass, then either our small and big objects were the same, or the user found a triple-collision: two different objects which both collide with the tester. By our argument above, this is impossible.

Conclusion

Bridging Small Script and Big Script is the hardest part of our covenant construction. To go from this bridge to an actual covenant, there are a few more steps, which are comparatively easy. In particular, a covenant script first asks the user to sign the transaction using the special “generator key”, which we can verify using the OP_CHECKSIG opcode. Using the bridge, we break this signature into 4-byte chunks. We then verify that its nonce was also equal to the generator key, which is easy to do once the signature has been broken up. Finally, we use techniques from the Schnorr trick to extract transaction data from the signature, which can then be constrained in whatever way the covenant wants.
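The reason a signature made with the generator key exposes transaction data can be seen from the Schnorr signing equation. The derivation below is a sketch that assumes the standard form of that equation, with private key $d$, nonce $k$, public key $P = dG$, nonce point $R = kG$, group order $n$, and $m$ the transaction digest being signed; the symbol names are chosen for this illustration.

```latex
\begin{aligned}
s &= k + e \cdot d \pmod{n}, \qquad e = H(R \,\|\, P \,\|\, m) \\
d = k = 1 \;&\Longrightarrow\; R = P = G, \qquad s = 1 + H(G \,\|\, G \,\|\, m) \pmod{n}
\end{aligned}
```

With both the key and the nonce fixed to the generator, $s - 1$ is a hash that commits to the transaction digest $m$, and once the signature is available as small chunks a script can inspect it.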

There are a few other things we can do: Appendix C describes a ring signature construction that would allow coins to be signed by one of a set of public keys, without revealing which one was used. In this case, we use the bridge to break up the public key, rather than the signature. Doing so gives us a significant efficiency improvement relative to the covenant construction, for technical reasons related to Taproot and detailed in the paper.

A final application that I want to draw attention to, discussed briefly in Section 7.2 of the paper, is that we can use our covenant construction to pull the transaction hash out of a Schnorr signature, and then simply re-sign the hash using a Lamport signature.

Why would we do this? As argued in the above link, Lamport-signing the signature this way makes it a quantum-secure signature on the transaction data; if this construction were the only way to sign for some coins, they would be immune from theft by a quantum computer.
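For readers who have not met Lamport signatures, the following is a minimal textbook-style sketch in Python. It illustrates the general scheme only and is not the Script-level construction from the paper: the use of SHA-256, the 256-bit digest, and the function names are choices made for this example. The scheme’s security rests on the hash function alone, which is why it is considered quantum-resistant, and each key pair must sign only one message.

```python
import hashlib
import secrets

N_BITS = 256  # one pair of secrets per bit of the message digest

def _h(data):
    return hashlib.sha256(data).digest()

def keygen():
    """Secret key: 256 pairs of random values. Public key: their hashes."""
    sk = [(secrets.token_bytes(32), secrets.token_bytes(32))
          for _ in range(N_BITS)]
    pk = [(_h(zero), _h(one)) for zero, one in sk]
    return sk, pk

def _digest_bits(message):
    digest = int.from_bytes(_h(message), "big")
    return [(digest >> i) & 1 for i in range(N_BITS)]

def sign(sk, message):
    """Reveal, for each digest bit, the secret matching that bit."""
    return [sk[i][bit] for i, bit in enumerate(_digest_bits(message))]

def verify(pk, message, signature):
    """Check that every revealed secret hashes to the committed value."""
    if len(signature) != N_BITS:
        return False
    return all(_h(signature[i]) == pk[i][bit]
               for i, bit in enumerate(_digest_bits(message)))

sk, pk = keygen()
message = b"example transaction hash"   # placeholder data
signature = sign(sk, message)
```

Signing a second message with the same key would reveal more of the secret values and let an attacker mix and match them, so a fresh key pair is needed per signature.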

Of course, since our construction requires tens of millions of dollars to use, nobody would make this construction the only way to sign for their coins. But there’s nothing stopping somebody from adding this construction to their coins, in addition to their existing non-quantum-secure methods of spending.

Then, if we woke up tomorrow to find that cheap quantum computers existed which were able to break Bitcoin signatures, we might propose an emergency soft-fork which disabled all elliptic curve signatures, including both Taproot key-spends and the OP_CHECKSIG opcode. This would effectively freeze everybody’s coins; but if the alternative were that everybody’s coins were freely stealable, maybe it wouldn’t make any difference. If this signature-disabling soft-fork were to allow the OP_CHECKSIG opcode when called with the generator key (such signatures provide no security anyway, and are only useful as a building block for complex Script constructions such as ours), then users of our Lamport-signature construction could continue to freely spend their coins, without fear of seizure or theft.

Of course, they would need to spend tens of millions of dollars to do so, but this is much better than “impossible”! And we expect and hope to see this cost drop dramatically, as people build on our research.

This is a guest post by Andrew Poelstra. Opinions expressed are entirely their own and do not necessarily reflect those of BTC Inc or Bitcoin Magazine.
