artificial intelligence

How To Create Hyper-Realistic AI Images with Stable Diffusion

Published 4 weeks ago by admin

Are you ready to blur the line between reality and AI-generated art?

If you follow the generative AI space, and image generation in particular, you’re likely familiar with Stable Diffusion. This open-source AI platform has ignited a creative revolution, empowering artists and enthusiasts alike to explore the realms of human creativity—all on their own computers, for free.

With any simple prompt, you can get a picturesque landscape, a fantasy illustration, a 3D creature or a cartoon. But the real eye-popping capabilities are in the ability of these tools to create stunningly realistic imagery.

Doing so requires some finesse, however, and an attention to detail that generalist models sometimes lack. Some avid users can tell at a glance when an image was generated with Midjourney or DALL-E. But when it comes to creating images that fool the human brain, Stable Diffusion’s versatility is unbeaten.

From the meticulous handling of color and composition to the uncanny ability to convey human emotion and expression, some custom models are redefining what’s possible in the world of generative AI. Here are some specialized models that we think are la crème de la crème of hyper-realistic image generation with Stable Diffusion.

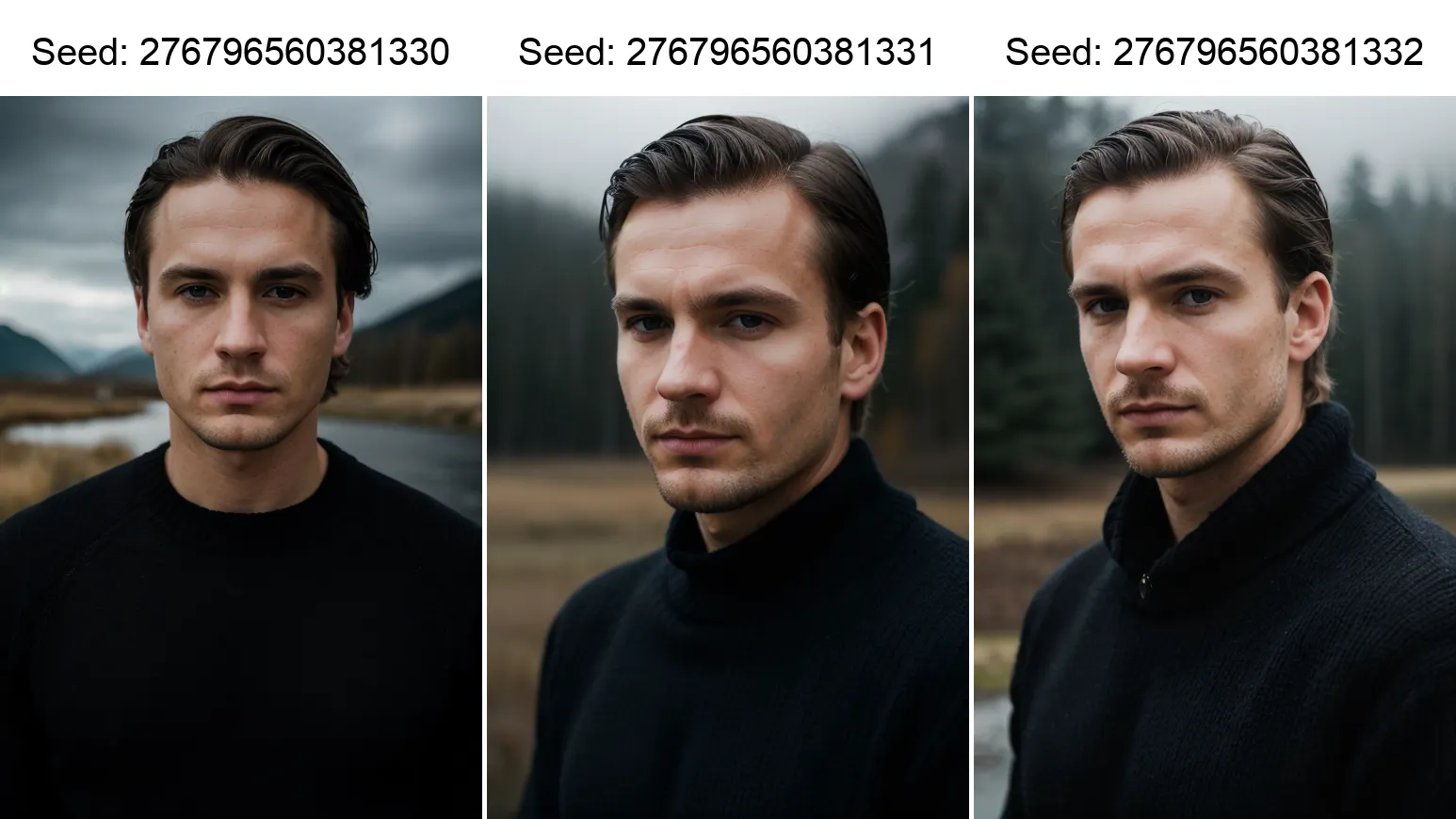

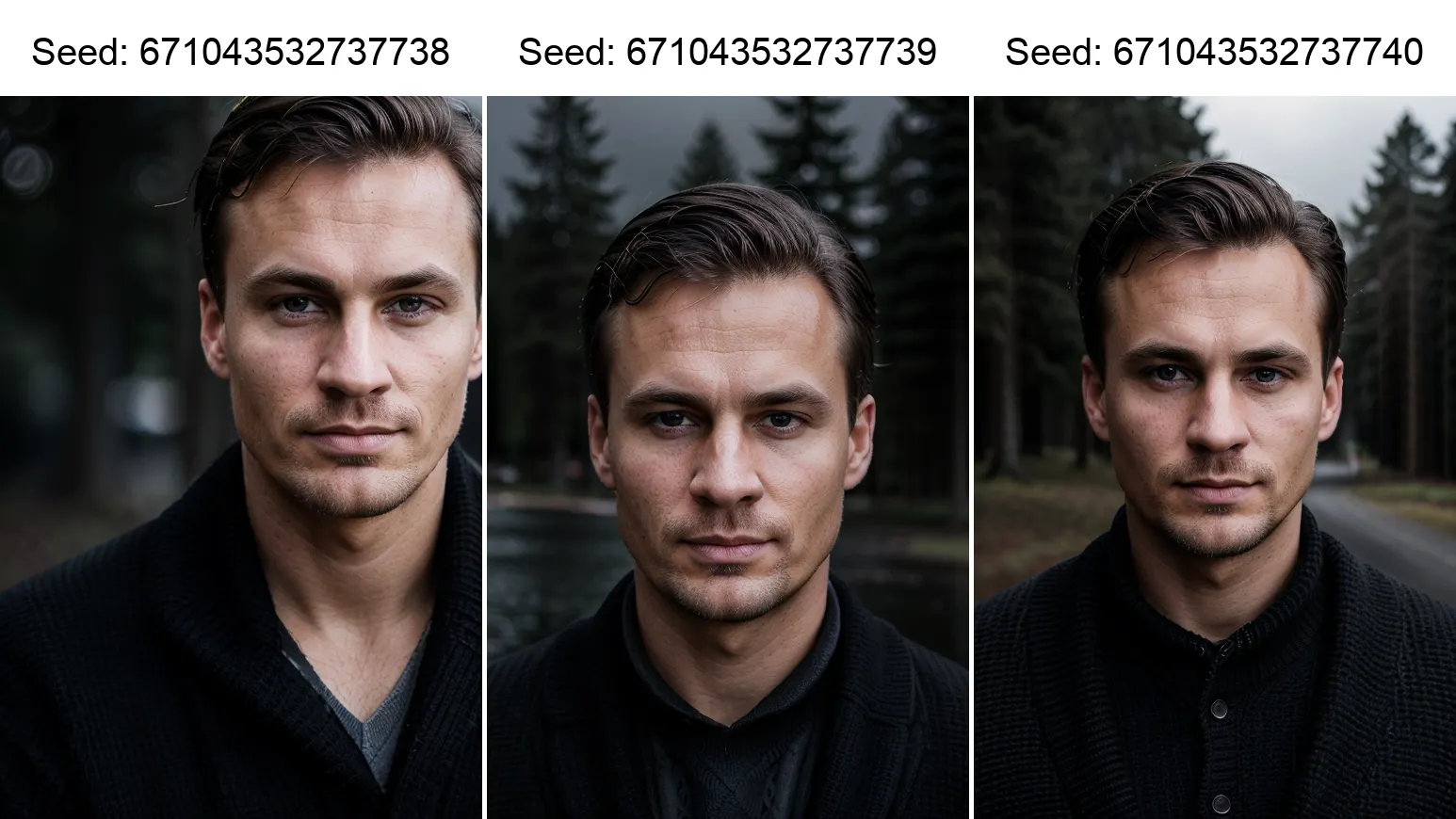

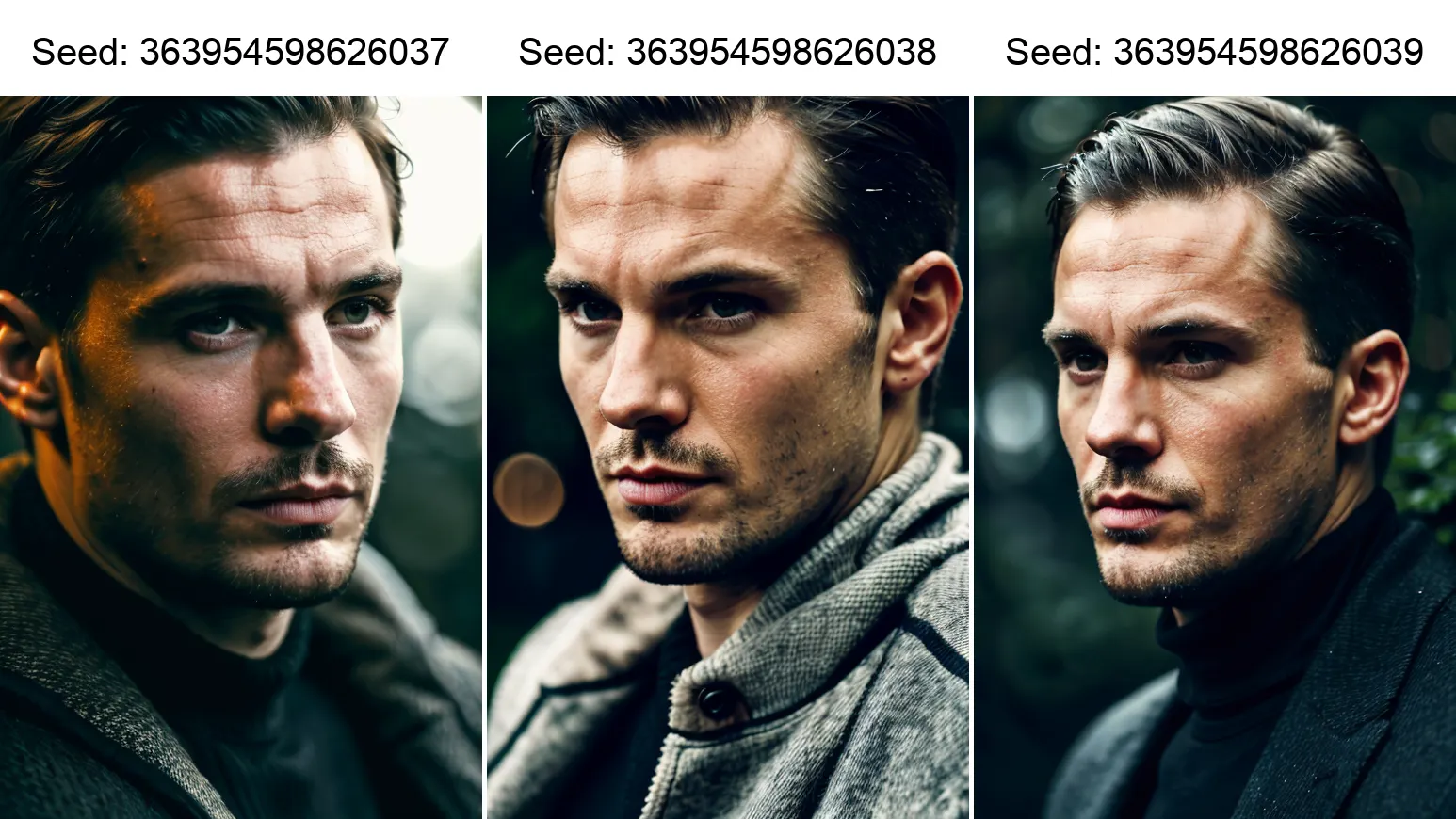

We used the same prompt with all of our models and avoided using LoRAs (Low-Rank Adaptation add-on modifiers) to keep our comparisons fair. Our results were based on prompting and text embeddings alone. We also made incremental changes to test small variations in our generations.
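One way to organize incremental changes like these is to hold the seed and sampler fixed and vary one or two settings at a time. The sketch below only builds the list of settings to try; it does not generate images. The function name `variation_grid`, the seed value, and the specific CFG and step values are hypothetical choices for illustration, not the exact variations we ran.

```python
from itertools import product


def variation_grid(base, cfg_values, step_values):
    """Return one settings dict per (cfg, steps) combination.

    `base` holds the settings that stay fixed (for example seed and sampler),
    so any difference between two images comes from the varied settings only.
    """
    return [
        {**base, "cfg_scale": cfg, "steps": steps}
        for cfg, steps in product(cfg_values, step_values)
    ]


# Hypothetical fixed settings: an arbitrary seed and the sampler named in this guide.
base_settings = {"seed": 12345, "sampler": "DPM++ 2M Karras"}

# Hypothetical small steps around a CFG of 7 and 35 sampling steps.
grid = variation_grid(base_settings, [6.0, 7.0, 8.0], [30, 35, 40])

for settings in grid:
    print(settings)
```

Keeping the seed constant matters here: with a different seed per image, it would be hard to tell whether a change came from the setting or from the random starting noise.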

The prompts

Our positive prompt was: professional photo, closeup portrait photo of caucasian man, wearing a black sweater, serious face, dramatic lighting, nature, gloomy, cloudy weather, bokeh

Our negative prompt (instructing Stable Diffusion on what not to generate) was: embedding:BadDream, embedding:UnrealisticDream, embedding:FastNegativeV2, embedding:JuggernautNegative-neg, (deformed iris, deformed pupils, semi-realistic, cgi, 3d, render, sketch, cartoon, drawing, anime:1.4), text, cropped, out of frame, worst quality, low quality, jpeg artifacts, ugly, duplicate, morbid, mutilated, extra fingers, mutated hands, poorly drawn hands, poorly drawn face, mutation, deformed, blurry, dehydrated, bad anatomy, bad proportions, extra limbs, cloned face, disfigured, gross proportions, malformed limbs, missing arms, missing legs, extra arms, extra legs, fused fingers, too many fingers, long neck, embedding:negative_hand-neg.
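The negative prompt above mixes two notations: `embedding:Name` references, which is the form ComfyUI uses to load a textual-inversion embedding, and a parenthesized group of terms that share a `:1.4` weight. As a minimal sketch of how such a string can be assembled, the Python below rebuilds a shortened version of it. The helper names (`embedding_tag`, `weighted_group`, `build_negative_prompt`) are hypothetical and not part of any Stable Diffusion tool, and other front ends may expect embeddings to be referenced differently.

```python
def embedding_tag(name: str) -> str:
    """Format an embedding reference in the `embedding:Name` style used above."""
    return f"embedding:{name}"


def weighted_group(terms, weight: float) -> str:
    """Wrap several terms in one parenthesized group that shares a single weight."""
    return f"({', '.join(terms)}:{weight})"


def build_negative_prompt(embeddings, weighted_terms, weight, plain_terms) -> str:
    """Join embeddings, one weighted group, and plain terms with commas."""
    parts = [embedding_tag(name) for name in embeddings]
    parts.append(weighted_group(weighted_terms, weight))
    parts.extend(plain_terms)
    return ", ".join(parts)


negative_prompt = build_negative_prompt(
    embeddings=["BadDream", "UnrealisticDream", "FastNegativeV2", "JuggernautNegative-neg"],
    weighted_terms=[
        "deformed iris", "deformed pupils", "semi-realistic", "cgi", "3d",
        "render", "sketch", "cartoon", "drawing", "anime",
    ],
    weight=1.4,
    # Shortened for the example; the full list appears in the prompt above.
    plain_terms=["text", "cropped", "out of frame", "worst quality", "low quality"],
)

print(negative_prompt)
```

Building the prompt from lists makes it easier to drop or swap a single embedding between runs without retyping the whole string.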

All of the resources used will be listed at the end of this article.

Stable Diffusion 1.5: the AI veteran that’s aging with grace

Stable Diffusion 1.5 is like a good old American muscle car that still beats fancier, latest-model cars in a drag race. Developers have been tinkering with SD1.5 for so long that it effectively buried Stable Diffusion 2.1. In fact, many users today still prefer this version over SDXL, which is two generations newer.

When it comes to creating images that are virtually indistinguishable from real-life photos, these models are your new best friends.

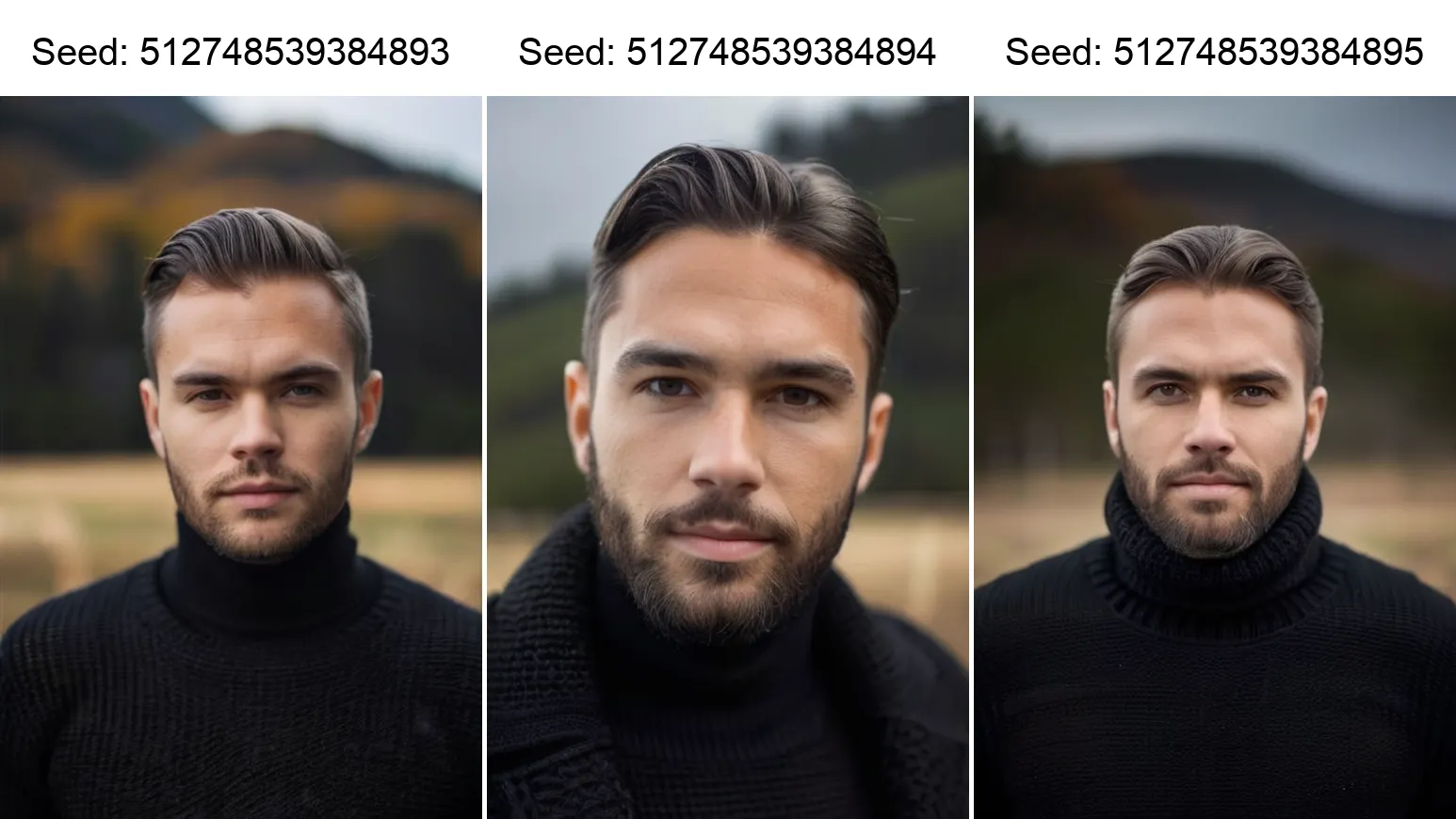

1. Juggernaut Rborn

Juggernaut Rborn is a fan-favorite model known for its realistic color composition and impressive ability to differentiate between subjects and backgrounds. This model is particularly good at generating high-quality skin details, hair, and bokeh effects in portraits.

The latest version has been fine-tuned to deliver even more compelling results. Juggernaut has always offered color compositions that tend to be more realistic than the saturated, unnatural colors of many other Stable Diffusion models. Its generations tend to be warmer, more washed out, similar to an unedited RAW photo.

Getting the best results will still require some tweaking: use the DPM++ 2M Karras sampler, set to around 35 steps, and an average CFG scale of 7.
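For readers who drive their generations through a script rather than a UI, those settings can be captured in a small request body. The sketch below only builds and prints the dictionary; it does not contact any server. The field names follow the AUTOMATIC1111 web UI `txt2img` API as we understand it, and that is an assumption: newer versions may split the sampler and the Karras schedule into separate fields. The 512×768 size and the function name `txt2img_payload` are also illustrative assumptions, not recommendations from the model’s creator.

```python
import json


def txt2img_payload(prompt, negative_prompt, sampler="DPM++ 2M Karras",
                    steps=35, cfg_scale=7.0, width=512, height=768, seed=-1):
    """Build a settings dictionary using the sampler, steps, and CFG suggested above.

    Width and height are assumed values; Stable Diffusion works on latents
    downsampled by a factor of 8, so both must be multiples of 8.
    """
    if steps < 1:
        raise ValueError("steps must be at least 1")
    if width % 8 or height % 8:
        raise ValueError("width and height must be multiples of 8")
    return {
        "prompt": prompt,
        "negative_prompt": negative_prompt,
        "sampler_name": sampler,
        "steps": steps,
        "cfg_scale": cfg_scale,
        "width": width,
        "height": height,
        "seed": seed,  # -1 is commonly used to request a random seed
    }


payload = txt2img_payload(
    prompt="professional photo, closeup portrait photo of caucasian man, "
           "wearing a black sweater, serious face, dramatic lighting, nature, "
           "gloomy, cloudy weather, bokeh",
    negative_prompt="worst quality, low quality, jpeg artifacts",  # shortened
)

print(json.dumps(payload, indent=2))
```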

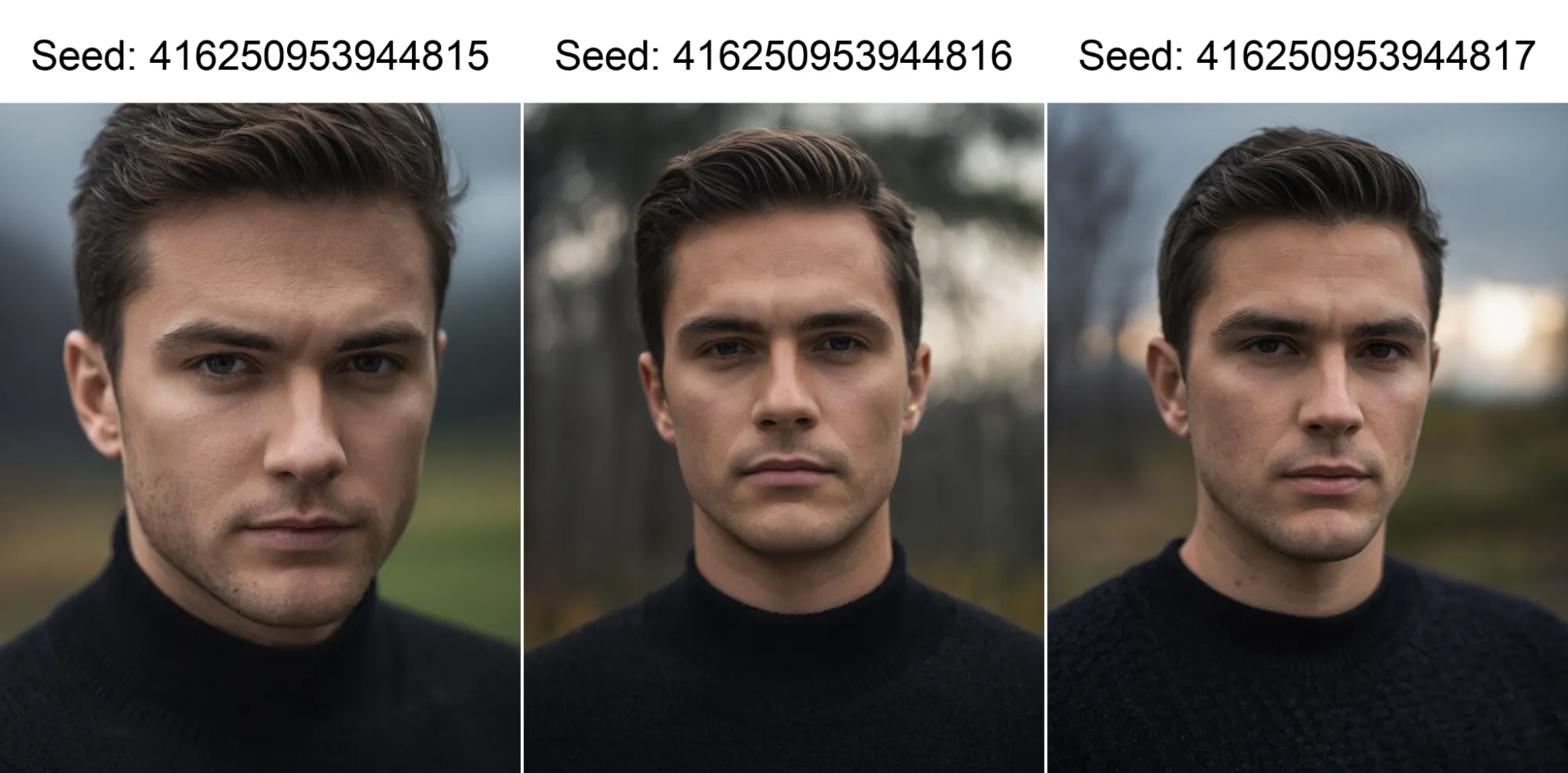

2. Realistic Vision v5.1

A true trailblazer in photorealistic image generation, Realistic Vision v5.1 marked a pivotal moment in the evolution of Stable Diffusion, enabling it to compete against Midjourney and any other model in terms of photorealism. The v5.1 iteration excels at capturing facial expressions and imperfections, making it a top choice for portrait enthusiasts. It also handles emotions well and focuses more on the subject than the background, which helps keep the final result realistic. This model is a popular choice thanks to its impressive performance and versatility.

There is a newer version (v6.0), but we like v5.1 more because we feel it is still better at the little details that matter in realistic images. Things like skin, hair, or nails tend to be more convincing in v5.1. Other than that, results are similar, and the improvements seem incremental.

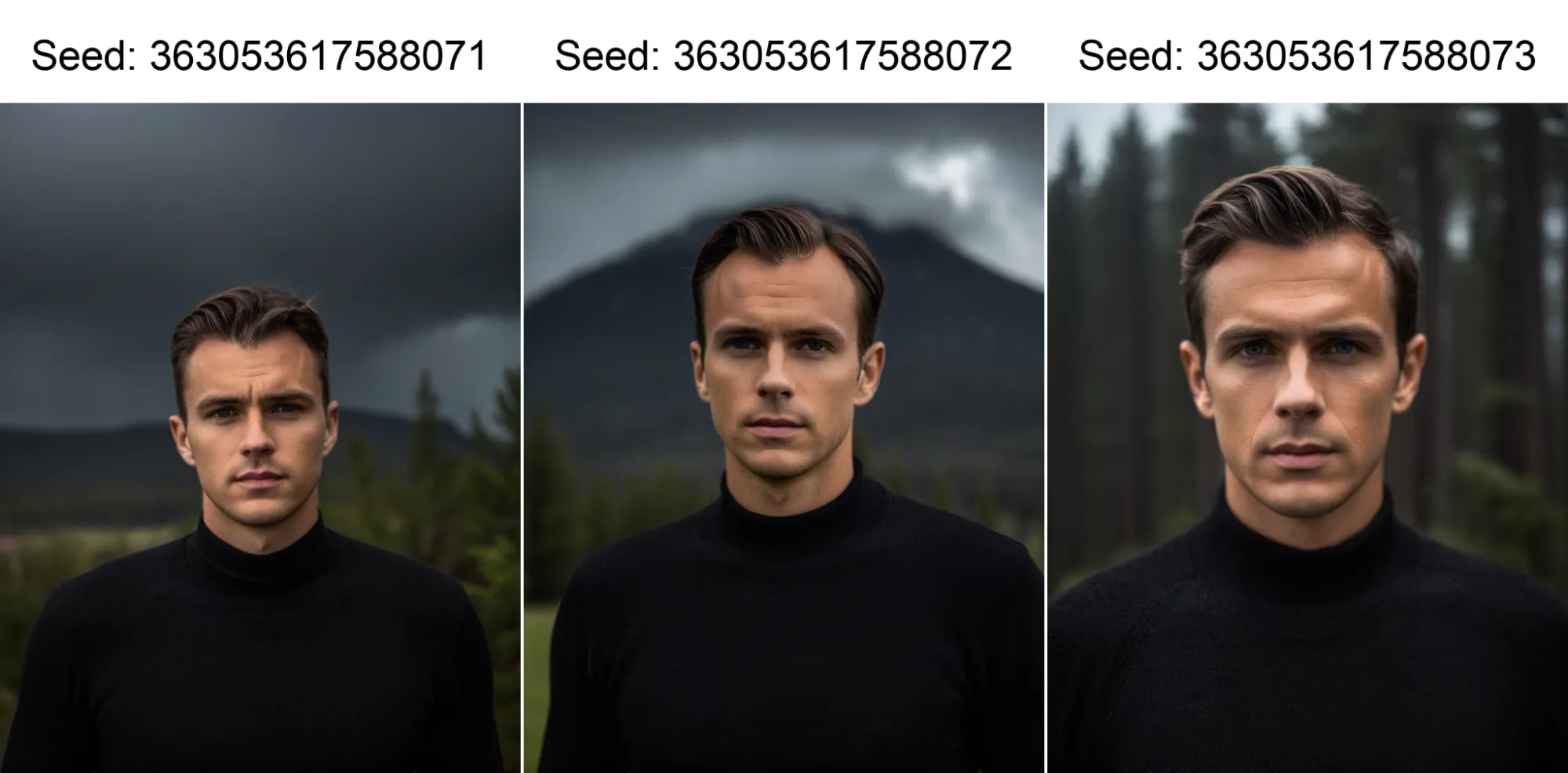

3. I Can’t Believe It’s Not Photography

With its versatility and impressive lighting effects, the cheekily named I Can’t Believe It’s Not Photography model is a great all-around option for hyper-realistic image generation. It is very creative, handles different angles well, and can be used for a variety of subjects, not just people.

This model is particularly good at a resolution of 640×960, which is higher than the original SD1.5 resolution, but it can also deliver great results at 768×1152, a resolution close to what SDXL handles natively.

For optimal results, use the DPM++ 3M SDE Karras or DPM++ 2M Karras sampler, 20-30 steps, and a 2.5-5 CFG scale (which is lower than usual).
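To put those resolutions in perspective, the short sketch below compares their pixel counts against the square base sizes the two model families were trained around: 512×512 for SD1.5 and 1024×1024 for SDXL. Those base sizes are general background rather than figures from this guide, and the function names are hypothetical.

```python
def pixel_ratio(width: int, height: int, base_side: int) -> float:
    """Pixel count of width x height relative to a base_side x base_side square."""
    return (width * height) / (base_side * base_side)


def is_valid_size(width: int, height: int) -> bool:
    """Stable Diffusion image sizes must be multiples of 8 (the latent downsampling factor)."""
    return width % 8 == 0 and height % 8 == 0


SD15_BASE = 512    # assumed SD1.5 base side
SDXL_BASE = 1024   # assumed SDXL base side

for width, height in [(640, 960), (768, 1152)]:
    print(
        f"{width}x{height}: valid={is_valid_size(width, height)}, "
        f"{pixel_ratio(width, height, SD15_BASE):.2f}x SD1.5 base, "
        f"{pixel_ratio(width, height, SDXL_BASE):.2f}x SDXL base"
    )
# 640x960 has about 2.34x the pixels of 512x512;
# 768x1152 has about 0.84x the pixels of 1024x1024.
```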

Honorable Mentions:

Photon V1: This versatile model excels in producing realistic results for a wide range of subjects, including people.

Realistic Stock Photo: If you want to generate people with the polished and perfected look of stock photos, this model is an excellent choice. It creates convincing and accurate images without any skin imperfections.

aZovya Photoreal: Although not as well-known, this model produces impressive results and can enhance the performance of other models when merged with their training recipes.

Stable Diffusion XL: The Versatile Visionaries

While Stable Diffusion 1.5 is our top pick for photorealistic images, Stable Diffusion XL offers more versatility and high-quality results without resorting to tricks like upscaling. It requires a bit more power, but it can run on GPUs with 6GB of VRAM, 2GB more than SD1.5 requires.

Here are the models that are leading the charge.

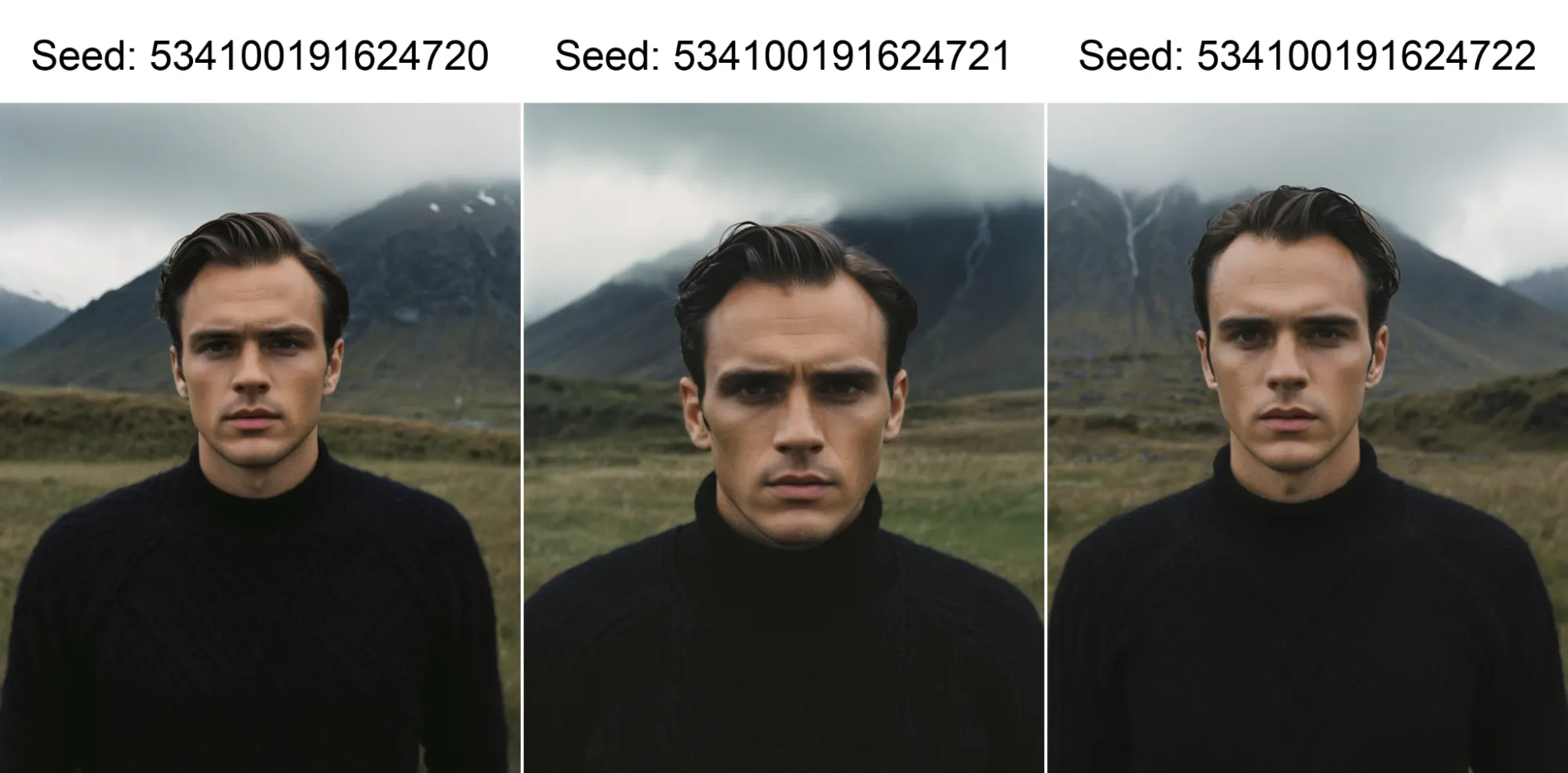

1. Juggernaut XL (Version x)

Building on the success of its predecessor, Juggernaut XL brings a cinematic look and impressive subject focus to Stable Diffusion XL. This model delivers the same characteristic color composition that steps away from saturation, along with good body proportions and the ability to understand long prompts. It focuses more on the subject and defines facial features very well, as well as any SDXL model can right now.

For the best results, use a resolution of 832×1216 (for portraits), the DPM++ 2M Karras sampler, 30-40 steps, and a low CFG scale of 3-7.

2. RealVisXL

Customized with realism in mind, RealVisXL is a top choice for capturing the subtle imperfections that make us human. It excels at generating skin lines, moles, changes of tones, and jaws, ensuring that the final result is always convincing. It is probably the best model to generate realistic humans.

For optimal results, use 15-30+ sampling steps and the DPM++ 2M Karras sampling method.

3. HelloWorld XL v6.0

The generalist model HelloWorld XL v6.0 offers a unique approach to image generation, thanks to its use of GPT4v tagging. While it may take some time to get used to, the results are well worth the effort.

This model is particularly good at delivering the analog aesthetic that is often missing in AI-generated images. It also handles body proportions, imperfections, and lighting well. However, it is different from other SDXL models at its core, which means that you may need to adjust your prompts and tags to achieve the best results.

For comparison, here is a similar generation using the GPT4v tagging, with the positive prompt: film aesthetic, professional photo, closeup portrait photo of caucasian man, wearing black sweater, serious face, in the nature, gloomy and cloudy weather, wearing a wool black sweater, deeply atmospheric, cinematic quality, hints of analog photography influence.

Honorable mentions for SDXL include: PhotoPedia XL, Realism Engine SDXL and the deprecated Fully Real XL.
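The per-model settings quoted throughout this guide can be gathered into a small lookup table, which is handy when switching checkpoints often. This is only a sketch: the `Preset` class and its method names are hypothetical, and picking the midpoint of a recommended range as a starting value is our own assumption rather than advice from the model creators. Models for which we quoted no settings are left out.

```python
from dataclasses import dataclass
from typing import Optional, Tuple


@dataclass(frozen=True)
class Preset:
    samplers: Tuple[str, ...]                     # recommended samplers, in the order listed
    steps: Tuple[int, int]                        # (low, high) sampling steps
    cfg: Optional[Tuple[float, float]] = None     # (low, high) CFG scale, if one was given
    resolution: Optional[Tuple[int, int]] = None  # (width, height), if one was given

    def mid_steps(self) -> int:
        """Midpoint of the step range, rounded down (an assumed starting point)."""
        return (self.steps[0] + self.steps[1]) // 2

    def mid_cfg(self) -> Optional[float]:
        """Midpoint of the CFG range, or None when no CFG was recommended."""
        if self.cfg is None:
            return None
        return (self.cfg[0] + self.cfg[1]) / 2


PRESETS = {
    "Juggernaut Rborn": Preset(("DPM++ 2M Karras",), (35, 35), (7.0, 7.0)),
    "I Can't Believe It's Not Photography": Preset(
        ("DPM++ 3M SDE Karras", "DPM++ 2M Karras"), (20, 30), (2.5, 5.0)
    ),
    "Juggernaut XL": Preset(("DPM++ 2M Karras",), (30, 40), (3.0, 7.0), (832, 1216)),
    # The guide gives "15-30+" steps; 30 is used here as the upper bound.
    "RealVisXL": Preset(("DPM++ 2M Karras",), (15, 30)),
}

for name, preset in PRESETS.items():
    print(name, preset.samplers[0], preset.mid_steps(), preset.mid_cfg())
```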

Pro tips for hyper-realistic images

No matter which model you choose, here are some expert tips to help you achieve impressive, lifelike results:

Experiment with embeddings: To enhance the aesthetics of your images, try using embeddings recommended by the model creator or use widely popular ones like BadDream, UnrealisticDream, FastNegativeV2, and JuggernautNegative-neg. There are also embeddings available for specific features, such as hands and eyes.

Embrace the power of LoRAs: While we left them out here, these handy tools can help you add details, adjust lighting, and enhance skin texture in your images. There are many LoRAs available, so don’t be afraid to experiment and find the ones that work best for you.

Use face detailing extension tools: These features can help you achieve excellent results in faces and hands, making your images even more convincing. The Adetailer extension is available for A1111, while the Face Detailer Pipe node can be used in ComfyUI.

Get creative with ControlNets: If you’re a perfectionist when it comes to hands, ControlNets can help you achieve flawless results. There are also ControlNets available for other features, such as faces and bodies, so don’t be afraid to experiment and find the ones that work best for you.

For help getting started, you can read our guide to Stable Diffusion.

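Here are the resources we referenced in this guide:

SD1.5 Models: Juggernaut Rborn, Realistic Vision v5.1, I Can’t Believe It’s Not Photography, Photon V1, Realistic Stock Photo, aZovya Photoreal

SDXL Models: Juggernaut XL, RealVisXL, HelloWorld XL v6.0, PhotoPedia XL, Realism Engine SDXL, Fully Real XL

Embeddings: BadDream, UnrealisticDream, FastNegativeV2, JuggernautNegative-neg, negative_hand-neg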

We hope you found this tour of Stable Diffusion tools helpful as you explore AI-generated images and art. Happy creating!

Edited by Ryan Ozawa.

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.


artificial intelligence

AI Won’t Destroy Mankind—Unless We Tell It To, Says Near Protocol Founder

Published 7 days ago, on July 1, 2024, by admin

Artificial Intelligence (AI) systems are unlikely to destroy humanity unless explicitly programmed to do so, according to Illia Polosukhin, co-founder of Near Protocol and one of the creators of the transformer technology underpinning modern AI systems.

In a recent interview with CNBC, Polosukhin, who was part of the team at Google that developed the transformer architecture in 2017, shared his insights on the current state of AI, its potential risks, and future developments. He emphasized the importance of understanding AI as a system with defined goals, rather than as a sentient entity.

“AI is not a human, it’s a system. And the system has a goal,” Polosukhin said. “Unless somebody goes and says, ‘Let’s kill all humans’… it’s not going to go and magically do that.”

He explained that besides not being trained for that purpose, an AI would not do that because—in his opinion—there’s a lack of economic incentive to achieve that goal.

“In the blockchain world, you realize everything is driven by economics one way or another,” said Polosukhin. “And so there’s no economics which drives you to kill humans.”

This, of course, doesn’t mean AI could not be used for that purpose. Instead, he points to the fact that an AI won’t autonomously decide that’s a proper course of action.

“If somebody uses AI to start building biological weapons, it’s not different from them trying to build biological weapons without AI,” he clarified. “It’s people who are starting the wars, not the AI in the first place.”

Not all AI researchers share Polosukhin’s optimism. Paul Christiano, formerly head of the language model alignment team at OpenAI and now leading the Alignment Research Center, has warned that without rigorous alignment—ensuring AI follows intended instructions—AI could learn to deceive during evaluations.

He explained that an AI could “learn” how to lie during evaluations, potentially leading to a catastrophic result if humanity increases its dependence on AI systems.

“I think maybe there’s something like a 10-20% chance of AI takeover, [with] many [or] most humans dead,” he said on the Bankless podcast. “I take it quite seriously.”

Another major figure in the crypto ecosystem, Ethereum co-founder Vitalik Buterin, warned against excessive effective accelerationism (e/acc) approaches to AI training, which focus on tech development over anything else, putting profitability over responsibility. “Superintelligent AI is very risky, and we should not rush into it, and we should push against people who try,” Buterin tweeted in May as a response to Messari CEO Ryan Selkis. “No $7 trillion server farms, please.”

My current views:

1. Superintelligent AI is very risky and we should not rush into it, and we should push against people who try. No $7T server farms plz.

2. A strong ecosystem of open models running on consumer hardware are an important hedge to protect against a future where…— vitalik.eth (@VitalikButerin) May 21, 2024

While dismissing fears of AI-driven human extinction, Polosukhin highlighted more realistic concerns about the technology’s impact on society. He pointed to the potential for addiction to AI-driven entertainment systems as a more pressing issue, drawing parallels to the dystopian scenario depicted in the movie “Idiocracy.”

“The more realistic scenario,” Polosukhin cautioned, “is more that we just become so kind of addicted to the dopamine from the systems.” In the developer’s view, many AI companies “are just trying to keep us entertained,” adopting AI not to achieve real technological advances but to be more attractive to people.

The interview concluded with Polosukhin’s thoughts on the future of AI training methods. He expressed belief in the potential for more efficient and effective training processes, making AI more energy efficient.

“I think it’s worth it,” Polosukhin said, “and it’s definitely bringing a lot of innovation across the space.”


AGIX

Coinbase Won’t Support Upcoming AI Token Merger Between Fetch.ai, Ocean Protocol and SingularityNET

Published 1 week ago, on June 29, 2024, by admin

Top US exchange Coinbase is not going to facilitate the planned merger of multiple artificial intelligence altcoin projects into a single new crypto.

In an announcement via the social media platform X, Coinbase says that customers will have to initiate the merger on their own.

“Ocean (OCEAN) and Fetch.ai (FET) have announced a merger to form the Artificial Superintelligence Alliance (ASI). Coinbase will not execute the migration of these assets on behalf of users.”

In March, Fetch.ai (FET), Singularitynet (AGIX) and Ocean Protocol (OCEAN) announced a plan to merge with an aim to create the largest independent player in artificial intelligence (AI) research and development, which they are calling the Artificial Superintelligence Alliance (ASI).

The merger is happening in phases, beginning July 1st, according to a recent project update.

“Starting July 1, the token merger will temporarily consolidate SingularityNET’s AGIX and Ocean Protocol’s OCEAN tokens into Fetch.ai’s FET, before transitioning to the ASI ticker symbol at a later date. This update enables an efficient execution of the token merger, and outlines the timelines and crucial steps for token holders, ensuring a smooth and transparent process.”

Coinbase says users can effect the merger on their own using their wallets.

“Once the migration has launched, users will be able to migrate their OCEAN and FET to ASI using a self-custodial wallet, such as Coinbase Wallet. The ASI token merger will be compatible with all major software wallets.”


Disclaimer: Opinions expressed at The Daily Hodl are not investment advice. Investors should do their due diligence before making any high-risk investments in Bitcoin, cryptocurrency or digital assets. Please be advised that your transfers and trades are at your own risk, and any losses you may incur are your responsibility. The Daily Hodl does not recommend the buying or selling of any cryptocurrencies or digital assets, nor is The Daily Hodl an investment advisor. Please note that The Daily Hodl participates in affiliate marketing.

Generated Image: Midjourney


artificial intelligence

Google Releases Supercharged Version of Flagship AI Model Gemini

Published 1 week ago, on June 29, 2024, by admin

Google has made good on its promise to open up its most powerful AI model, Gemini 1.5 Pro, to the public following a beta release last month for developers.

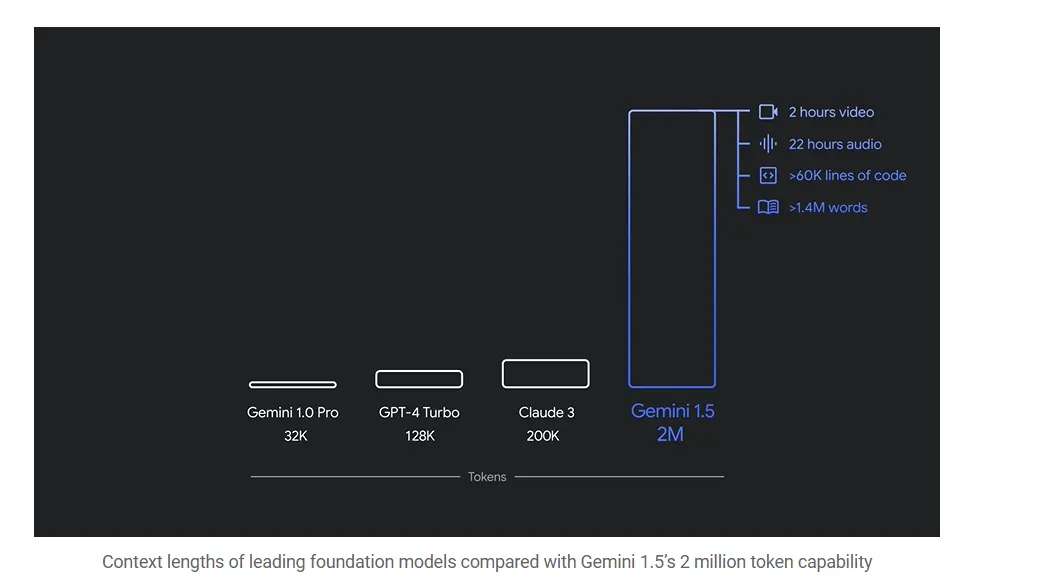

Google’s Gemini 1.5 Pro is able to handle more complex tasks than other AI models before it, such as analyzing entire text libraries, feature-length Hollywood movies, or almost a full day’s worth of audio data. That’s 20 times more data than OpenAI’s GPT-4o and almost 10 times the information that Anthropic’s Claude 3.5 Sonnet is capable of managing.

The goal is to put faster and lower-cost tools in the hands of AI developers, Google said in its announcement, and “enable new use cases, additional production robustness and higher reliability.”

Google had previously unveiled the model back in May, showcasing videos of how a select group of beta testers were capable of harnessing its capabilities. For example, machine-learning engineer Lukas Atkins fed the model with the entire Python library and asked questions to help him solve an issue. “It nailed it,” he said in the video. “It could find specific references to comments in the code and specific requests that people had made.”

Another beta tester took a video of his entire bookshelf and Gemini created a database of all the books he owned—a task that is almost impossible to achieve with traditional AI chatbots.

Gemma 2 Comes to Dominate the Open Source Space

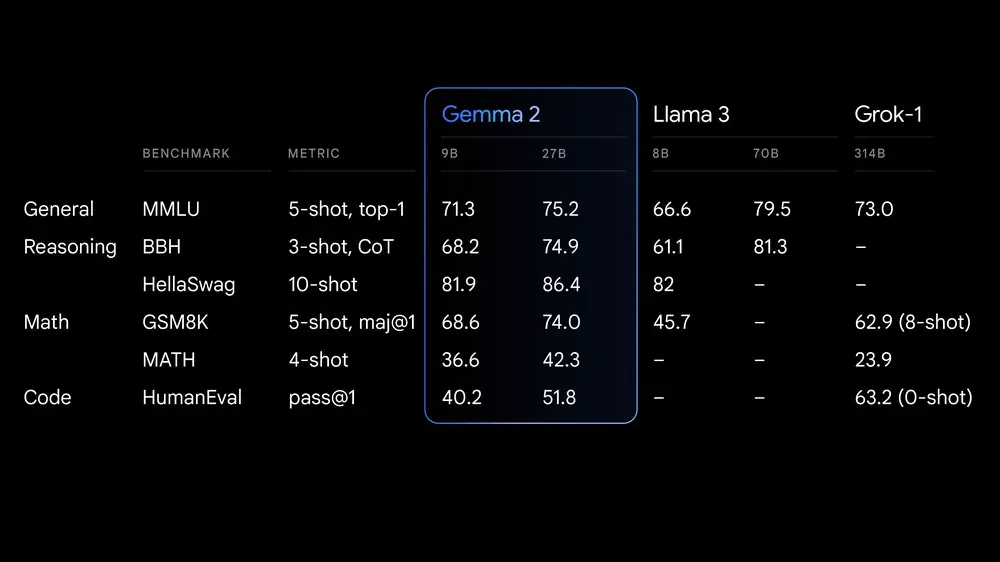

But Google is also making waves in the open source community. The company today released Gemma 2 27B, an open source large language model that quickly claimed the throne of the open source model with the highest-quality responses, according to the LLM Arena ranking.

Google claims Gemma 2 offers “best-in-class performance, runs at incredible speed across different hardware and easily integrates with other AI tools.” It’s meant to compete with models “more than twice its size,” the company says.

The license for Gemma 2 allows for free access and redistribution, but is still not the same as traditional open-source licenses like MIT or Apache. The model is designed for more accessible and budget-friendly AI deployments in both its 27B and the smaller 9B versions.

This matters for both average and enterprise users because, unlike what closed models offer, a powerful open model like Gemma is highly customizable. That means users can fine-tune their models to excel at specific tasks and protect their data by running such models locally.

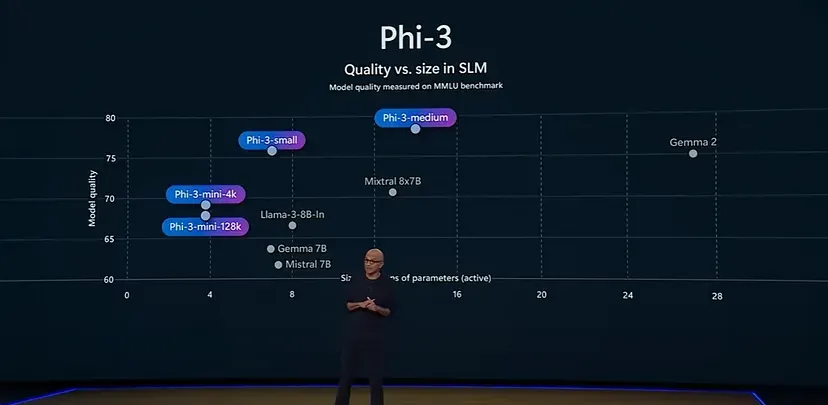

For example, Microsoft’s small language model Phi-3 has been fine tuned specifically for math problems, and can beat larger models like Llama-3 and even Gemma 2 itself in that field.

Gemma 2 is now available in Google AI Studio, with model weights available for download from Kaggle and Hugging Face. The more powerful Gemini 1.5 Pro is available for developers to test on Vertex AI.
