
Scammers Are Using AI Phishing and ‘Juice Jacking’ to Target Travelers

Published 3 weeks ago by admin

With the summer travel season ramping up and travelers hitting the road, cybercriminals are turning to new tech to execute scams and steal data, from artificial intelligence email attacks to fake smartphone chargers that ensnare power-hungry travelers.

Phishing email attacks have increased by 856% over the last year, according to a recent report by cybersecurity firm SlashNext, which attributed the surge in part to generative AI. The technology lets scammers craft phishing emails in multiple languages at once, contributing to a 4,151% increase in malicious emails since the launch of ChatGPT in 2022.

“A threat actor can prompt AI to write an email very quickly, and in any language, with almost zero cost,” SlashNext CEO Patrick Harr told Decrypt in an interview. “You will see these [phishing emails] are not just in English only—I can write in a number of languages and target a number of people in different parts of the world, and I can do it literally within seconds.”

A recent report by the International Business Times highlighted a sharp increase in phishing attacks targeting both business and leisure travelers with fake website listings offering massive discounts, such as $200 a night in the Swiss Alps when other sites charge $1,000 a night.

“If there’s even a little bit of doubt, call the property, hosts, and customer support,” Booking.com’s chief information security officer Marnie Wilking told IBT.

Booking.com did not immediately respond to a request for comment from Decrypt.

In a phishing attack, unsuspecting victims receive messages with a link to a malicious website or application that tricks them into submitting personal or security information, such as passwords.

In January, cybercriminals targeted crypto email lists hosted on the MailerLite service, stealing over $700,000 from phishing victims.

Harr said that a newer form of phishing, “smishing” (text message phishing), is an increasingly popular and dangerous way to attack mobile phones.

“We have obviously shifted to a mobile world long ago and people are so used to using text messages, and these bad actors always go to where you’re comfortable and try to interject themselves,” Harr said. “The thing we’ve seen as a change inside of ‘smishing’ is it’s no longer just a ‘click here’ because your gift package is on the doorstep.”

Harr said that QR codes, which businesses embraced during the COVID-19 pandemic, are now being deployed by scammers.

“80% of all phones have really no protection at all from phishing,” Harr said, citing a recent report by Verizon. “So that’s the reason why they’re using QR codes—trying to either get you to pay for something, reveal sensitive information about yourself, or steal your password.”

Juice jacking

While phishing attacks remain far and away the most prevalent attack vector used by cybercriminals, the U.S. Federal Communications Commission (FCC) recently issued a warning about “juice jacking,” which often targets travelers looking to recharge their devices at airports and hotels.

Attackers are exploiting the fact that the USB standard carries both power and data over the same connection. When plugged into a victim’s device, a maliciously configured USB port or cable could steal information or install unwanted software.

Avoid using free charging stations in airports, hotels or shopping centers. Bad actors have figured out ways to use public USB ports to introduce malware and monitoring software onto devices. Carry your own charger and USB cord and use an electrical outlet instead.

— FBI Denver (@FBIDenver) April 6, 2023

To avoid this emerging type of attack, the FCC suggests using personal chargers plugged into basic power outlets, using portable batteries, or using data blockers, which restrict a USB connection to power transfer only.

Year-round vigilance

Decrypt reached out to the U.S. Cybersecurity and Infrastructure Security Agency (CISA) for more advice.

A CISA spokesperson pointed to resources the agency provides to help consumers better protect themselves from phishing scams, including guidance on recognizing common phishing signs such as urgent or emotional language, requests for personal information, and incorrect email addresses.

Misspelled words used to be a clear sign of a phishing attack, but CISA said this is no longer the case due to the widespread use of AI.
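CISA’s warning signs lend themselves to a simple rule-based check. The Python sketch below is a hypothetical illustration, not a CISA tool: the phrase lists, the `phishing_signs` function, and the example domains are all assumptions made for this example. In line with the agency’s point about AI, it deliberately does not treat spelling mistakes as a signal.

```python
from email.utils import parseaddr

# Hypothetical phrase lists for illustration; they are NOT taken from CISA guidance.
URGENT_PHRASES = ("urgent", "immediately", "act now", "within 24 hours", "final notice")
SENSITIVE_REQUESTS = ("password", "credit card", "bank account", "social security", "verify your identity")


def phishing_signs(sender: str, expected_domain: str, body: str) -> list[str]:
    """Return the warning signs found in one message.

    Spelling is deliberately not checked: as CISA notes, AI-written
    phishing emails are often free of typos.
    """
    signs: list[str] = []
    text = body.lower()

    # Sign 1: urgent or emotional language meant to rush the reader.
    if any(phrase in text for phrase in URGENT_PHRASES):
        signs.append("urgent or emotional language")

    # Sign 2: the message asks for personal or security information.
    if any(phrase in text for phrase in SENSITIVE_REQUESTS):
        signs.append("request for personal information")

    # Sign 3: the sender's domain does not belong to the organization it claims to be.
    # parseaddr splits '"Name" <user@host>' into ("Name", "user@host").
    _, address = parseaddr(sender)
    domain = address.rpartition("@")[2].lower()
    expected = expected_domain.lower()
    if domain != expected and not domain.endswith("." + expected):
        signs.append("sender address does not match the claimed organization")

    return signs


if __name__ == "__main__":
    # Hypothetical lookalike sender impersonating example.com; all three signs fire.
    print(phishing_signs(
        '"Support" <support@examp1e-help.net>',
        "example.com",
        "URGENT: reply with your credit card number immediately.",
    ))
```

Keyword matching like this is easy to evade and will flag some legitimate mail. It only mirrors the signs CISA lists; real email filters combine many more signals.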

“This isn’t just for summer, this is something people can do all year round to be more secure,” the CISA spokesperson told Decrypt.

Edited by Ryan Ozawa.

Generally Intelligent Newsletter

A weekly AI journey narrated by Gen, a generative AI model.


AI Won’t Destroy Mankind—Unless We Tell It To, Says Near Protocol Founder

Published July 1, 2024, by admin

Artificial intelligence (AI) systems are unlikely to destroy humanity unless explicitly programmed to do so, according to Illia Polosukhin, co-founder of Near Protocol and one of the creators of the transformer technology underpinning modern AI systems.

In a recent interview with CNBC, Polosukhin, who was part of the team at Google that developed the transformer architecture in 2017, shared his insights on the current state of AI, its potential risks, and future developments. He emphasized the importance of understanding AI as a system with defined goals, rather than as a sentient entity.

“AI is not a human, it’s a system. And the system has a goal,” Polosukhin said. “Unless somebody goes and says, ‘Let’s kill all humans’… it’s not going to go and magically do that.”

He explained that, besides not being trained for that purpose, an AI would not pursue that goal because, in his opinion, there is no economic incentive to do so.

“In the blockchain world, you realize everything is driven by economics one way or another,” said Polosukhin. “And so there’s no economics which drives you to kill humans.”

This does not mean AI could not be used for that purpose. Rather, his point is that an AI will not autonomously decide that such an outcome is a proper course of action.

“If somebody uses AI to start building biological weapons, it’s not different from them trying to build biological weapons without AI,” he clarified. “It’s people who are starting the wars, not the AI in the first place.”

Not all AI researchers share Polosukhin’s optimism. Paul Christiano, formerly head of the language model alignment team at OpenAI and now leading the Alignment Research Center, has warned that without rigorous alignment (ensuring AI follows intended instructions), AI could learn to deceive during evaluations.

Such deception, he argued, could lead to a catastrophic result as humanity grows more dependent on AI systems.

“I think maybe there’s something like a 10-20% chance of AI takeover, [with] many [or] most humans dead,” he said on the Bankless podcast. “I take it quite seriously.”

Another major figure in the crypto ecosystem, Ethereum co-founder Vitalik Buterin, warned against taking an extreme “effective accelerationism” (e/acc) approach to AI training, which prioritizes technological development above all else and puts profitability ahead of responsibility. “Superintelligent AI is very risky, and we should not rush into it, and we should push against people who try,” Buterin tweeted in May in response to Messari CEO Ryan Selkis. “No $7 trillion server farms, please.”

My current views:

1. Superintelligent AI is very risky and we should not rush into it, and we should push against people who try. No $7T server farms plz.

2. A strong ecosystem of open models running on consumer hardware are an important hedge to protect against a future where…

— vitalik.eth (@VitalikButerin) May 21, 2024

While dismissing fears of AI-driven human extinction, Polosukhin highlighted more realistic concerns about the technology’s impact on society. He pointed to the potential for addiction to AI-driven entertainment systems as a more pressing issue, drawing parallels to the dystopian scenario depicted in the movie “Idiocracy.”

“The more realistic scenario,” Polosukhin cautioned, “is more that we just become so kind of addicted to the dopamine from the systems.” In his view, many AI companies “are just trying to keep us entertained,” adopting AI not to achieve real technological advances but to make their products more attractive to people.

The interview concluded with Polosukhin’s thoughts on the future of AI training methods. He expressed confidence that training processes will become more efficient and effective, making AI more energy efficient.

“I think it’s worth it,” Polosukhin said, “and it’s definitely bringing a lot of innovation across the space.”


Coinbase Won’t Support Upcoming AI Token Merger Between Fetch.ai, Ocean Protocol and SingularityNET

Published June 29, 2024, by admin

Top US exchange Coinbase will not facilitate the planned merger of several artificial intelligence altcoin projects into a single new crypto asset.

In an announcement via the social media platform X, Coinbase says that customers will have to migrate their tokens on their own.

“Ocean (OCEAN) and Fetch.ai (FET) have announced a merger to form the Artificial Superintelligence Alliance (ASI). Coinbase will not execute the migration of these assets on behalf of users.”

In March, Fetch.ai (FET), SingularityNET (AGIX) and Ocean Protocol (OCEAN) announced a plan to merge, with the aim of creating the largest independent player in artificial intelligence (AI) research and development, which they are calling the Artificial Superintelligence Alliance (ASI).

The merger is happening in phases, beginning July 1st, according to a recent project update.

“Starting July 1, the token merger will temporarily consolidate SingularityNET’s AGIX and Ocean Protocol’s OCEAN tokens into Fetch.ai’s FET, before transitioning to the ASI ticker symbol at a later date. This update enables an efficient execution of the token merger, and outlines the timelines and crucial steps for token holders, ensuring a smooth and transparent process.”

Coinbase says users can carry out the migration themselves using their own wallets.

“Once the migration has launched, users will be able to migrate their OCEAN and FET to ASI using a self-custodial wallet, such as Coinbase Wallet. The ASI token merger will be compatible with all major software wallets.”


Disclaimer: Opinions expressed at The Daily Hodl are not investment advice. Investors should do their due diligence before making any high-risk investments in Bitcoin, cryptocurrency or digital assets. Please be advised that your transfers and trades are at your own risk, and any losses you may incur are your responsibility. The Daily Hodl does not recommend the buying or selling of any cryptocurrencies or digital assets, nor is The Daily Hodl an investment advisor. Please note that The Daily Hodl participates in affiliate marketing.

Generated Image: Midjourney


Google Releases Supercharged Version of Flagship AI Model Gemini

Published June 29, 2024, by admin

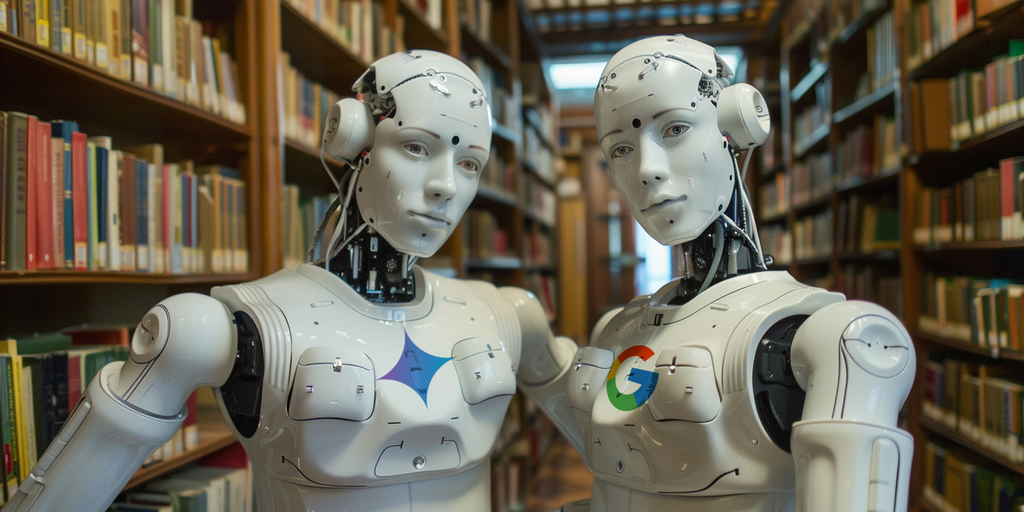

Google has made good on its promise to open up its most powerful AI model, Gemini 1.5 Pro, to the public following a beta release for developers last month.

Google’s Gemini 1.5 Pro can handle more complex tasks than earlier AI models, such as analyzing entire text libraries, feature-length Hollywood movies, or almost a full day’s worth of audio. That is 20 times more data than OpenAI’s GPT-4o can handle and almost 10 times what Anthropic’s Claude 3.5 Sonnet can manage.

The goal is to put faster and lower-cost tools in the hands of AI developers, Google said in its announcement, and “enable new use cases, additional production robustness and higher reliability.”

Google first unveiled the model in May, showcasing videos of a select group of beta testers putting its capabilities to work. For example, machine-learning engineer Lukas Atkins fed the model the entire Python library and asked it questions to help him solve an issue. “It nailed it,” he said in the video. “It could find specific references to comments in the code and specific requests that people had made.”

Another beta tester recorded a video of his entire bookshelf, and Gemini created a database of all the books he owned, a task that is almost impossible to achieve with traditional AI chatbots.

Gemma 2 Comes to Dominate the Open Source Space

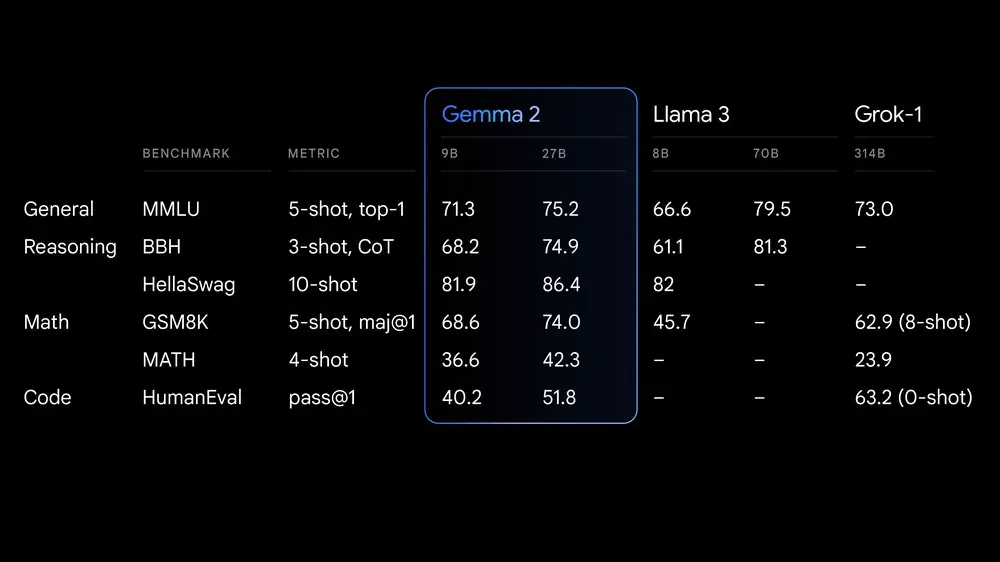

But Google is also making waves in the open source community. The company has released Gemma 2 27B, an open source large language model that quickly claimed the top spot for response quality among open source models, according to the LLM Arena ranking.

Google claims Gemma 2 offers “best-in-class performance, runs at incredible speed across different hardware and easily integrates with other AI tools.” It’s meant to compete with models “more than twice its size,” the company says.

The license for Gemma 2 allows free access and redistribution, but it is still not the same as traditional open source licenses such as MIT or Apache. The model, in both its 27B version and the smaller 9B version, is designed for more accessible and budget-friendly AI deployments.

This matters for both average and enterprise users because, unlike closed models, a powerful open model like Gemma is highly customizable. Users can fine-tune such models to excel at specific tasks and protect their data by running them locally.

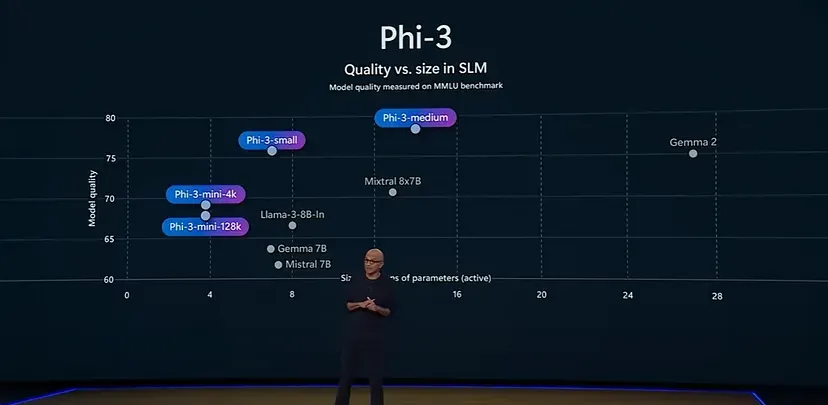

For example, Microsoft’s small language model Phi-3 has been fine-tuned specifically for math problems and can beat larger models such as Llama-3, and even Gemma 2 itself, in that domain.

Gemma 2 is now available in Google AI Studio, with model weights available for download from Kaggle and Hugging Face Models, while the powerful Gemini 1.5 Pro is available for developers to test on Vertex AI.
